Summary

Use Summary to group failed, flaky, and skipped tests by cause. Filters and sorting help you review faster.

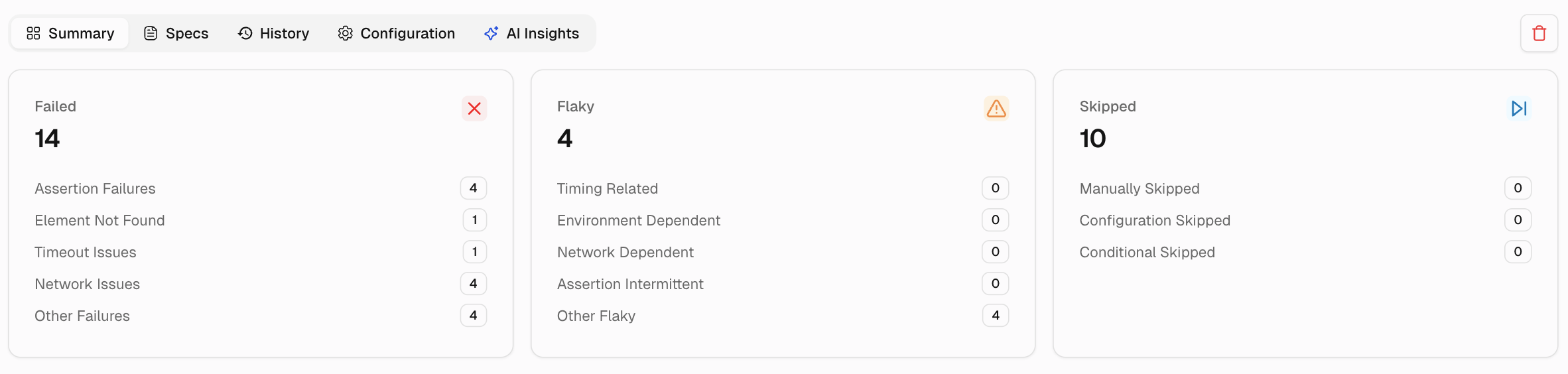

KPI Tiles

1. Failed

A failed test ran and ended with an error or unmet assertion. Evidence shows the failing step or message.

- Assertion Failure: The expected value did not match the actual value.

- Element Not Found: The locator did not resolve to an element.

- Timeout Issues: An action or wait exceeded its configured timeout.

- Network Issues: A request failed or returned an unexpected status.

- Other Failures: Errors that do not fit the categories above, such as script errors or setup issues.

2. Flaky

A test is categorized as Flaky when the outcome is inconsistent across attempts or recent runs without a code change. It often passes on retry.

- Timing-related: Sensitive to execution order, race conditions, or wait timing. Often passes on retry.

- Environment-dependent: Fails only in a specific environment or runner.

- Network-dependent: Intermittent remote call or service instability.

- Intermittent Assertion: Non-deterministic data or state causes occasional mismatches.

- Other Flaky: Unstable for reasons outside the above buckets.
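The "inconsistent across attempts" rule can be sketched in a few lines. This is only an illustration, not the product's actual classifier: the `AttemptOutcome` type and the `classifyAttempts` function are hypothetical names, and the sketch looks only at attempts within a single run, not at recent runs.

```typescript
// Hypothetical shape: one entry per attempt (initial run plus retries) of the same test.
type AttemptOutcome = "passed" | "failed";
type RunStatus = "passed" | "failed" | "flaky" | "skipped";

// Assumption: a test is flaky when its attempts disagree, for example
// a failure on the first attempt followed by a pass on retry.
function classifyAttempts(attempts: AttemptOutcome[]): RunStatus {
  if (attempts.length === 0) return "skipped"; // the test never executed
  const anyPass = attempts.includes("passed");
  const anyFail = attempts.includes("failed");
  if (anyPass && anyFail) return "flaky";
  return anyPass ? "passed" : "failed";
}
```

Under these assumptions, a test that fails once and then passes on retry is reported as flaky, while a test that fails on every attempt stays in Failed.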

3. Skipped

A skipped test did not run because of a skip directive, configuration, or runtime condition. No assertions were executed.

- Manually Skipped: Explicitly skipped in code or via tag.

- Configuration Skipped: Disabled by config, project, or reporter settings.

- Conditional Skipped: Skipped due to an evaluated condition at runtime.

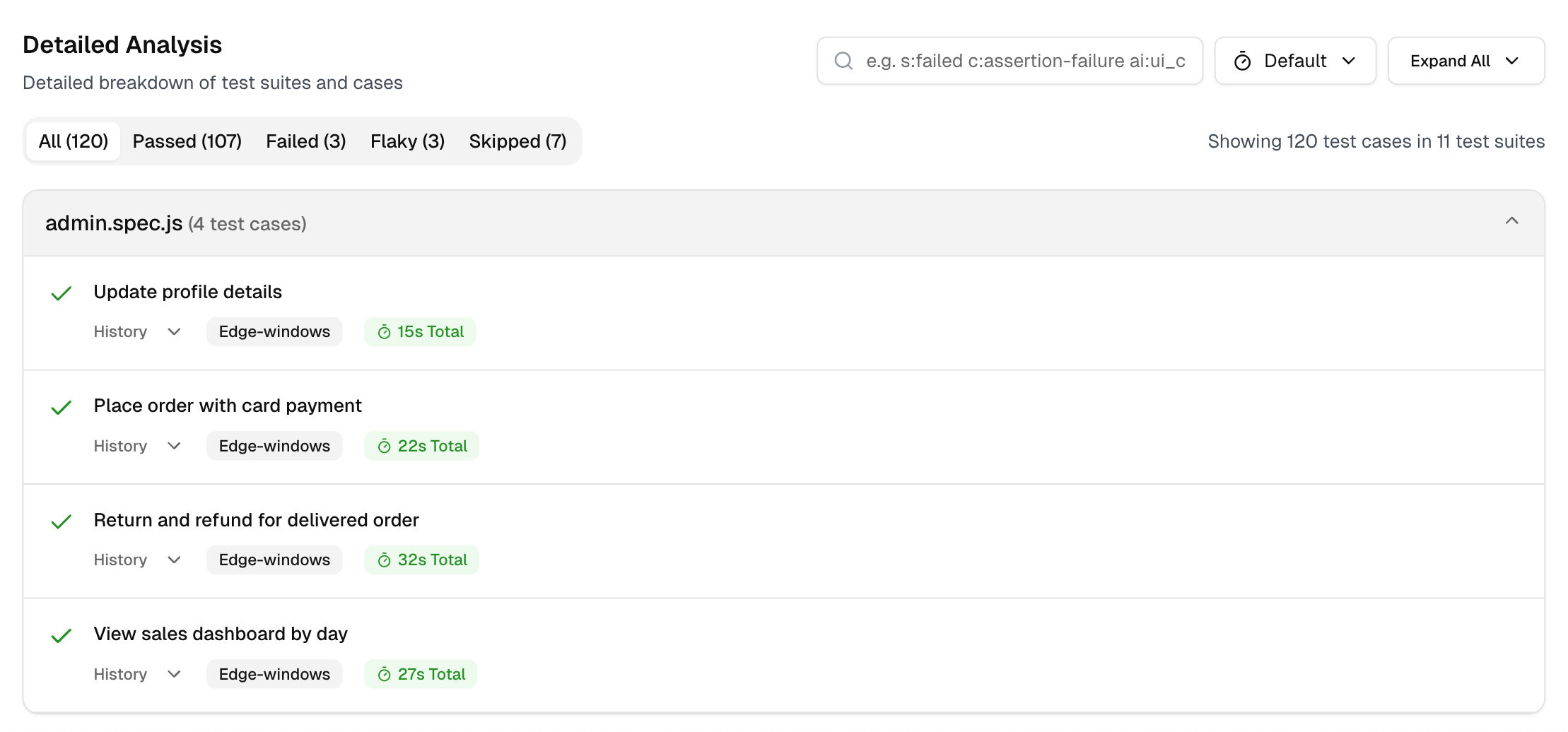

Detailed Analysis

A single-table view of every test in the run, designed for fast triage at a glance.

- Shows status, spec file, duration, retries, failure cluster, and AI category in one place.

- History preview shows the current run and up to 10 past executions to confirm new vs recurring issues.

- In the test run breakdown, each row (for example, failed test cases) includes a “Trace #” link whenever a trace artifact exists. Clicking this link opens the full Playwright trace, showing actions, events, console output, and network calls to help diagnose the exact failure point.

- Provides token search and filter chips.

- Sort by duration or status to surface slow or failing tests first.

- One-click access to evidence (error text, screenshots, console) and to the test case details page.

Use this view to move from a high-level signal to the exact test that needs action in seconds.
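The “Trace #” link described above appears only when a trace artifact exists, so the Playwright project has to record traces in the first place. A minimal config sketch, assuming traces should be captured on the first retry. The retry count is an illustrative value, and how artifacts reach the dashboard depends on your reporter or upload setup, which is not shown here:

```typescript
// playwright.config.ts
import { defineConfig } from '@playwright/test';

export default defineConfig({
  // Retry failed tests so inconsistent outcomes can surface within one run
  // (illustrative value, adjust for your project).
  retries: 2,
  use: {
    // Record a trace when a test is retried for the first time.
    trace: 'on-first-retry',
  },
});
```

With `trace: 'on-first-retry'`, tests that pass on the first attempt produce no trace, which keeps artifact size down.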

1. Search and Tokens

To filter, select one of the Failed, Flaky, or Skipped cards or, for a more specific filter, use these short search tokens:

- s: status (passed, failed, flaky, skipped)

- c: cluster (assertion-failure, timeout, network-error, …)

- @ tag (smoke, regression, e2e)

- b: browser (chrome, firefox, safari, edge)
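To make the token semantics concrete, here is a rough sketch of how such a query could be parsed and applied to table rows. It is not the product's implementation. The exact query syntax is an assumption (tokens separated by spaces, values written directly after the prefix, as in `s:failed c:timeout @smoke b:chrome`), and `TestRow`, `TokenQuery`, `parseQuery`, and `matchesQuery` are hypothetical names.

```typescript
// Hypothetical row shape for the Detailed Analysis table.
type TestRow = {
  title: string;
  status: string;
  cluster?: string;
  tags: string[];
  browser: string;
};

type TokenQuery = { status?: string; cluster?: string; tags: string[]; browser?: string };

// Assumed syntax: space-separated tokens such as "s:failed c:timeout @smoke b:chrome".
function parseQuery(input: string): TokenQuery {
  const query: TokenQuery = { tags: [] };
  for (const part of input.trim().split(/\s+/)) {
    if (part === "") continue; // empty input
    if (part.startsWith("@")) query.tags.push(part.slice(1));
    else if (part.startsWith("s:")) query.status = part.slice(2);
    else if (part.startsWith("c:")) query.cluster = part.slice(2);
    else if (part.startsWith("b:")) query.browser = part.slice(2);
    // Unrecognized parts are ignored in this sketch.
  }
  return query;
}

// A row matches when every token present in the query agrees with the row.
function matchesQuery(row: TestRow, query: TokenQuery): boolean {
  return (
    (query.status === undefined || row.status === query.status) &&
    (query.cluster === undefined || row.cluster === query.cluster) &&
    (query.browser === undefined || row.browser === query.browser) &&
    query.tags.every((tag) => row.tags.includes(tag))
  );
}
```

In this sketch the tokens combine with AND semantics, so each added token narrows the table further.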

2. Duration Sort

Switch between Default, High to Low, and Low to High to spot slow tests and quick wins.
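The three sort modes can be pictured as follows. This is a small sketch with hypothetical names (`DurationSort`, `TimedRow`, `sortByDuration`), and it assumes that Default means the table's original order:

```typescript
type DurationSort = "default" | "high-to-low" | "low-to-high";

// Hypothetical row shape; durations are in milliseconds.
type TimedRow = { title: string; durationMs: number };

// Returns a new array and leaves the input untouched.
function sortByDuration(rows: TimedRow[], mode: DurationSort): TimedRow[] {
  const copy = [...rows];
  if (mode === "default") return copy; // assumption: Default keeps the original order
  return copy.sort((a, b) =>
    mode === "high-to-low" ? b.durationMs - a.durationMs : a.durationMs - b.durationMs
  );
}
```

High to Low puts the slowest tests at the top, and Low to High puts the fastest tests first.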

3. Context Carry-Over

When you choose a group in Summary, the same context is applied here, so the table lists only matching tests.