Test Cases

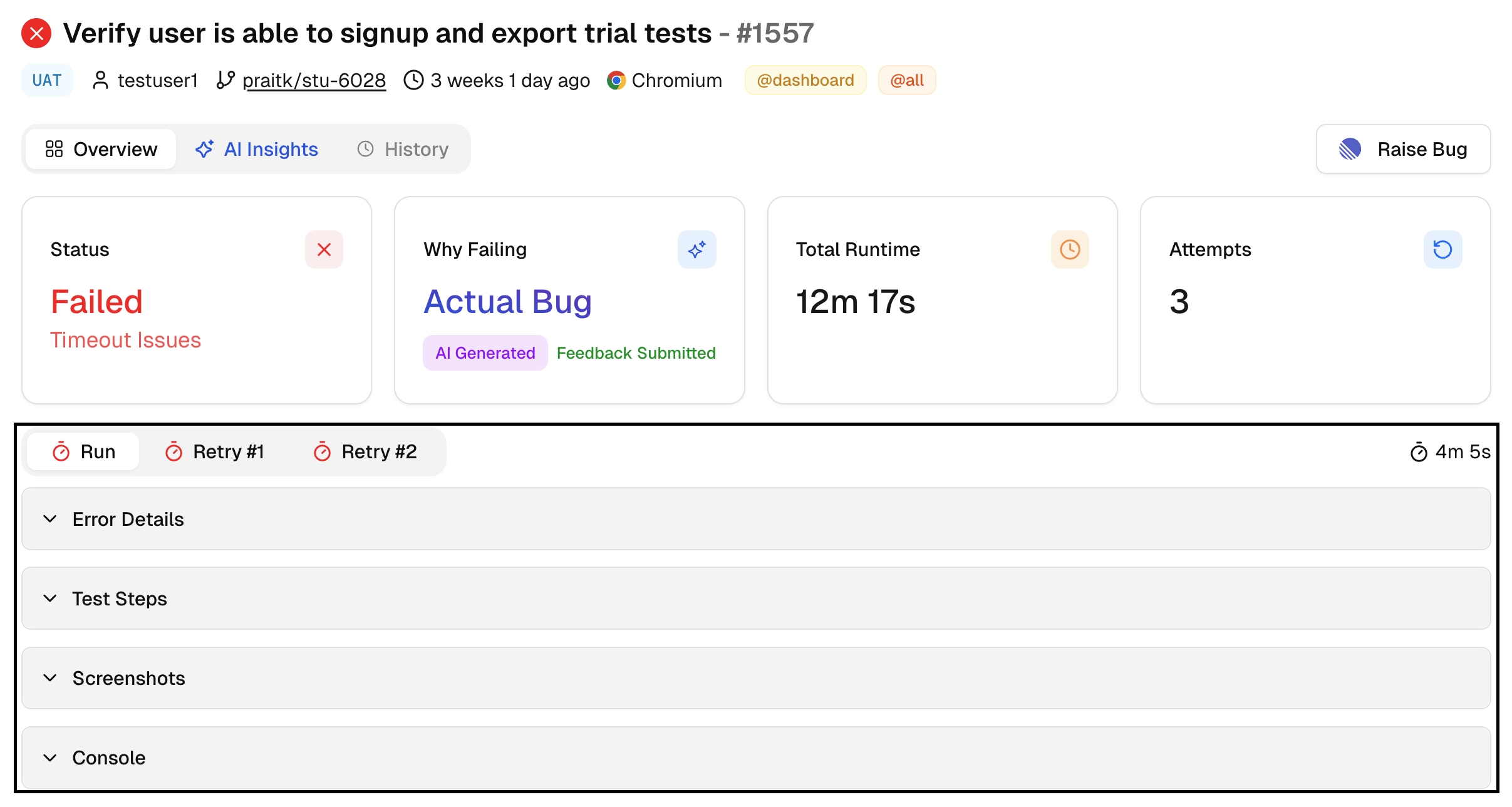

Inspect a single test's outcome, cause, runtime, attempts, and evidence. Compare attempts side by side to confirm a fix or spot flakiness.

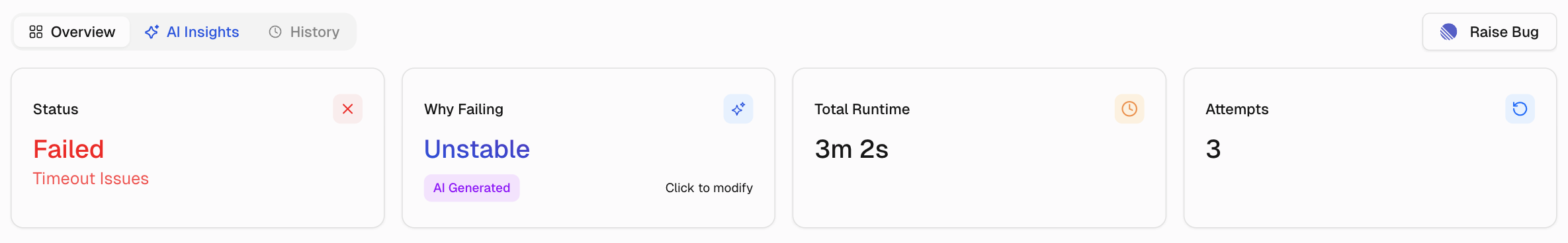

Overview

Summarizes the test's state in the current run: status, primary cause, runtime, attempts, and links to evidence.

KPI Tiles

1. Status

Outcome for this run: Passed, Failed, Skipped, or Flaky. When the test does not pass, the primary technical cause is identified so triage can start with context.

2. Why failing

AI category for the failure (Actual Bug, UI Change, Unstable, or Miscellaneous) with a confidence score. Helps you decide whether to fix the code, update selectors, or stabilize the test.

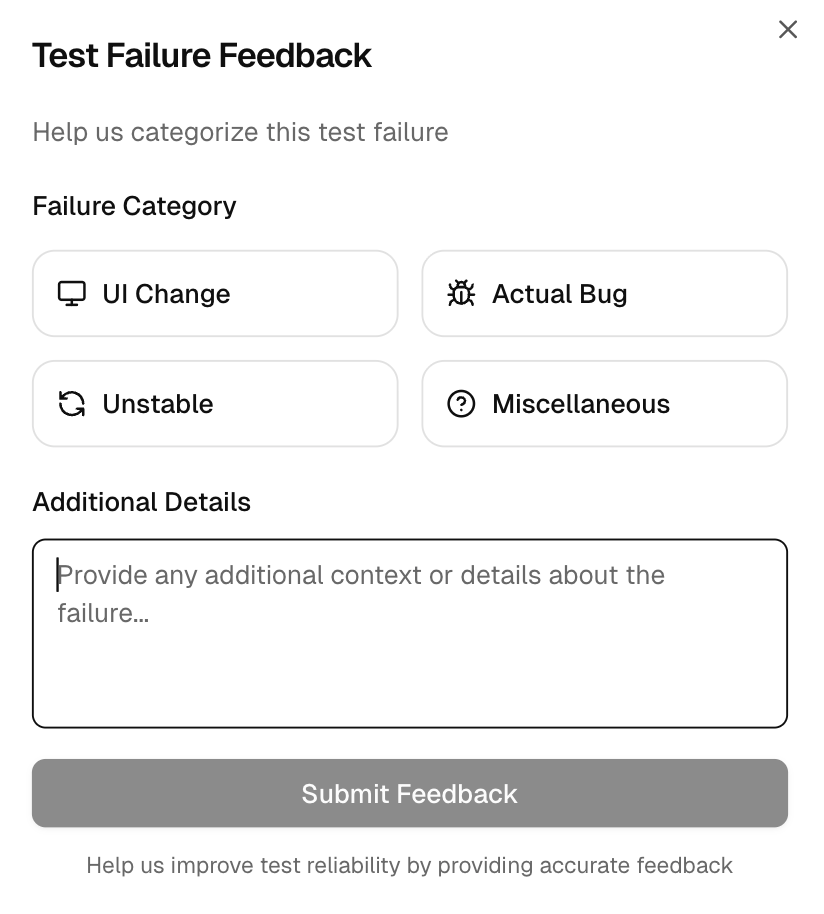

Feedback on classification

Available for failed or flaky tests. Use the Test Failure Feedback form to set the correct category and add optional context.

Your input updates the classification for this run and improves future classifications, making AI Insights more reliable for the team.

3. Total runtime

Total execution time for this test in the current run. Useful for spotting slowdowns after code or configuration changes.

4. Attempts

Number of retries triggered by your retry settings. A pass after a retry often signals instability that needs cleanup.
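The link between the Status and Attempts tiles can be sketched as follows. This is a hypothetical model, not the product's actual logic: it assumes a test is labeled Flaky when an earlier attempt failed and a later retry passed, a common convention among test runners. The `derive_status` function and the per-attempt result strings are illustrative names only.

```python
from enum import Enum


class Status(Enum):
    PASSED = "Passed"
    FAILED = "Failed"
    SKIPPED = "Skipped"
    FLAKY = "Flaky"


def derive_status(attempts: list[str]) -> Status:
    """Derive a run status from ordered per-attempt results (hypothetical model).

    `attempts` holds one entry per attempt, in order: the initial run first,
    then each retry, e.g. ["failed", "passed"]. Values are assumed to be
    "passed", "failed", or "skipped".
    """
    # No attempts, or every attempt skipped: the test never really ran.
    if not attempts or all(a == "skipped" for a in attempts):
        return Status.SKIPPED
    if attempts[-1] == "passed":
        # A pass that needed a retry after a failure is treated as flaky.
        if any(a == "failed" for a in attempts[:-1]):
            return Status.FLAKY
        return Status.PASSED
    # The final attempt did not pass, so the test failed for this run.
    return Status.FAILED


def retry_count(attempts: list[str]) -> int:
    """Retries are every attempt after the initial run."""
    return max(len(attempts) - 1, 0)
```

For example, `derive_status(["failed", "passed"])` returns `Status.FLAKY` with `retry_count` of 1, which matches the "pass after a retry" pattern described above.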

Evidence panels

One tab per attempt (Run, Retry 1, Retry 2).

- Error details - Exact error text and key line. Copy it to reproduce locally or paste it into a ticket.
- Test steps - Step list with per-step timing. Confirms where in the flow the error occurred.
- Screenshots - Captured frames from the attempt. Validate the UI state at the point of failure.
- Console - Browser console output. Use it to correlate network or script errors with UI symptoms.

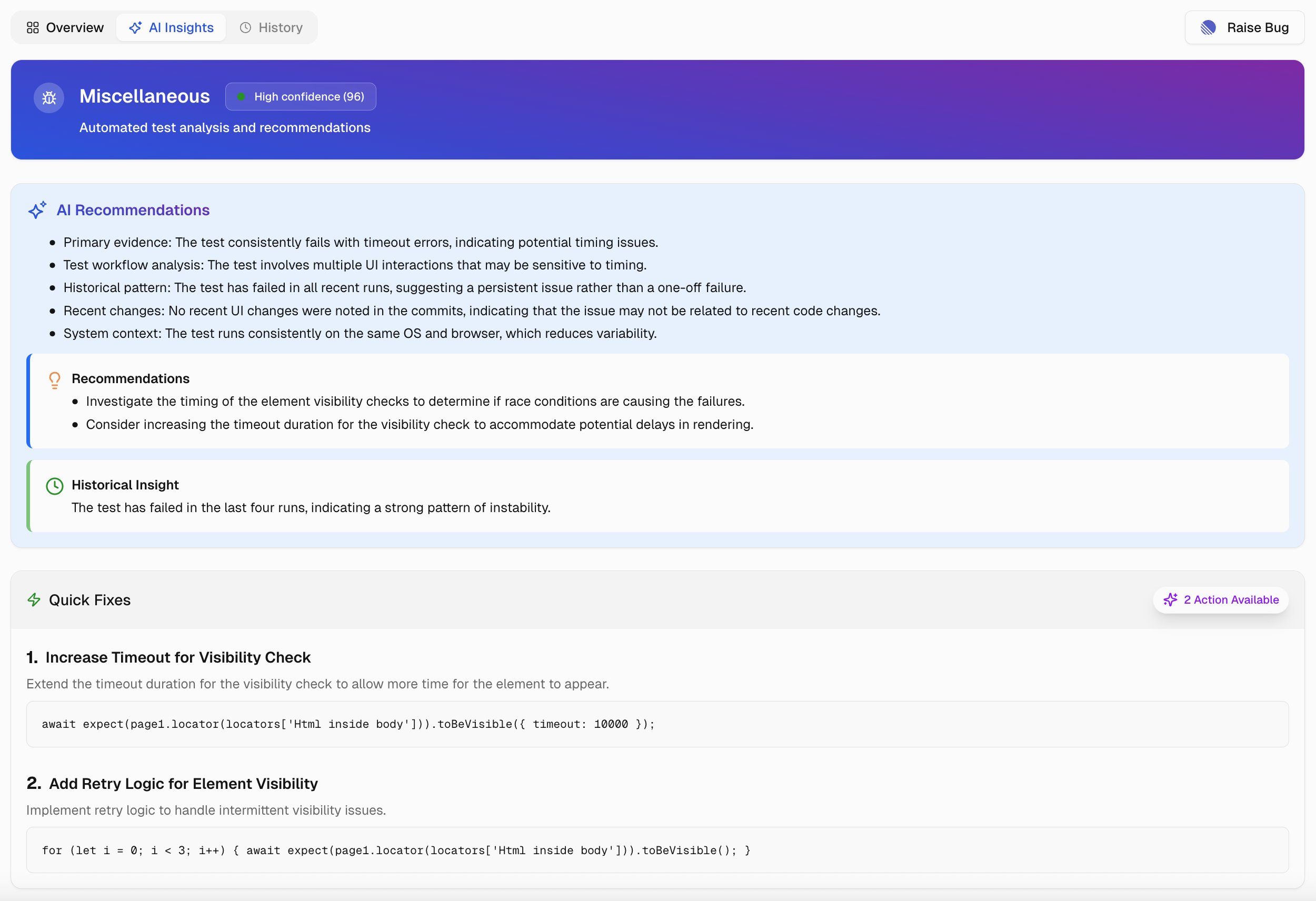

AI Insights

Explains why this test failed or was flaky and what to do next. Available for failed or flaky tests; includes confidence scores and next steps.

- Category and confidence - Current AI label with its score. You can update it via feedback.
- AI recommendations - Primary evidence and likely cause, linked to recent changes when relevant.
- Historical insight - Behavior across recent runs, showing whether the issue is new or recurring.
- Quick fixes - Targeted changes to try first. Validate them locally or in a branch.

This analysis and these recommendations are generated by artificial intelligence. While we strive for accuracy, please review and validate all suggestions before implementing them in your codebase. Use AI insights as guidance, not as definitive solutions.