Check the Flaky Category

TestDino assigns a sub-category to each flaky test:

| Category | What to investigate |
|---|---|
| Timing Related | Race conditions, animation waits, polling intervals |
| Environment Dependent | CI runner differences, resource constraints, parallel execution |
| Network Dependent | API timeouts, rate limits, service availability |
| Assertion Intermittent | Dynamic data, timestamps, random values |

Compare Passing and Failing Attempts

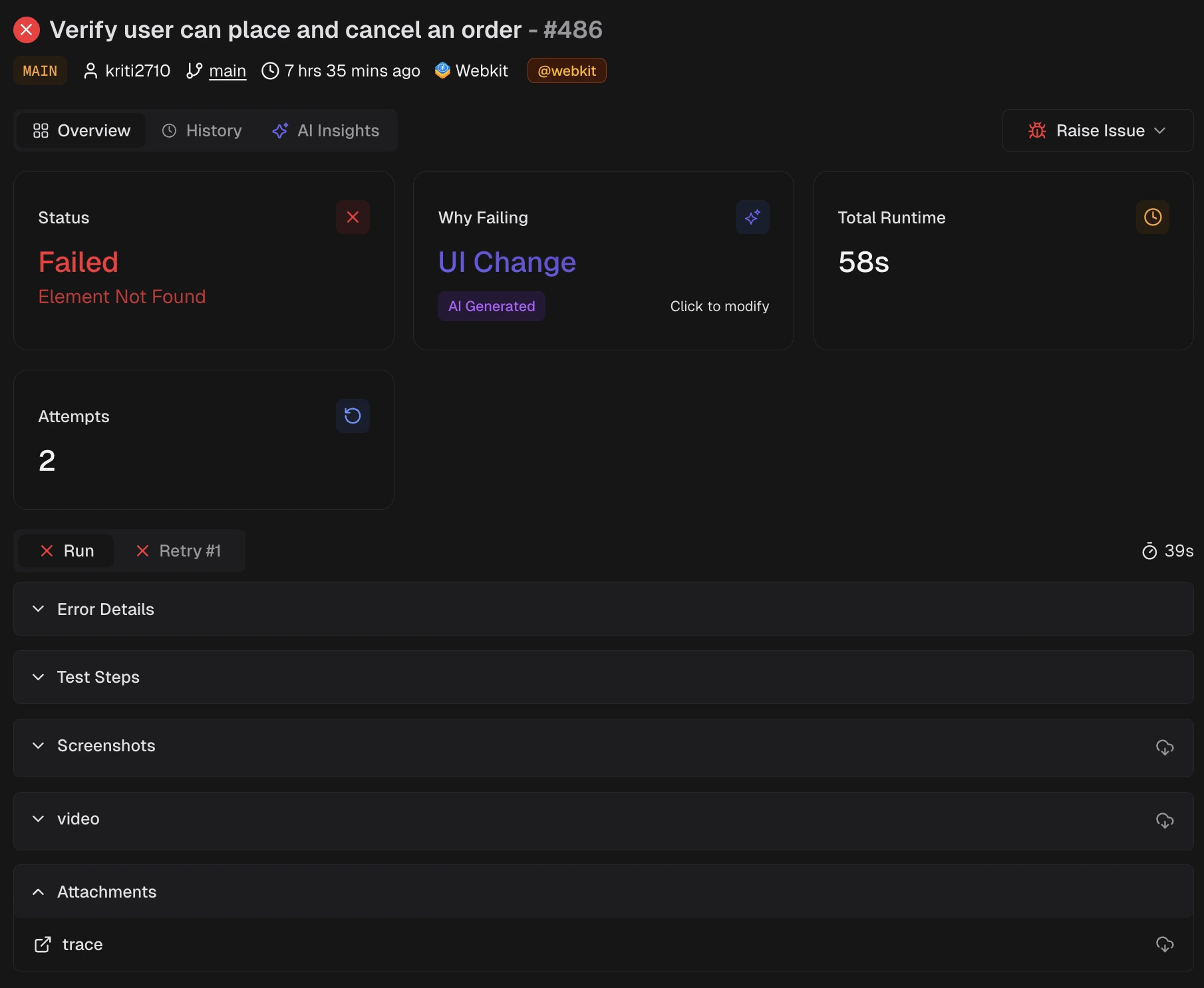

Open the test case details. The evidence panel shows tabs for each attempt: Run, Retry 1, Retry 2. Compare:

- Screenshots: Look for UI differences between attempts

- Console logs: Check for errors or warnings that appear only in failures

- Network requests: Identify slow or failed API calls

- Timing: Note duration differences between attempts
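To isolate console lines that appear only in the failing attempt, a small diff over the two logs can help. This is a minimal sketch that assumes you have copied each attempt's console output into an array of strings. `onlyInFailing` and `normalize` are hypothetical helpers, not part of TestDino.

```typescript
// Mask digits so timestamps, ports, and durations do not make
// otherwise identical lines look different.
function normalize(line: string): string {
  return line.replace(/\d+/g, "#").trim();
}

// Return lines from the failing attempt that have no counterpart in the
// passing attempt, in their original order and without repeats.
function onlyInFailing(passingLogs: string[], failingLogs: string[]): string[] {
  const seen: Record<string, boolean> = Object.create(null);
  for (const line of passingLogs) {
    seen[normalize(line)] = true;
  }

  const unique: string[] = [];
  for (const line of failingLogs) {
    const key = normalize(line);
    if (!seen[key]) {
      seen[key] = true; // report each distinct line once
      unique.push(line);
    }
  }
  return unique;
}
```

Masking every digit is a deliberate simplification: it also hides differences in status codes, so loosen `normalize` if those matter for your investigation.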

Use the Trace Viewer

If traces are enabled, open the trace for both passing and failing attempts. The trace shows:

- Action timeline with exact timestamps

- Network requests and responses

- Console output

- DOM snapshots at each step

Look for:

- Actions that take longer in failing runs

- Network requests that time out or return errors

- Elements that appear at different times
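Spotting actions that take longer in failing runs is easier with the durations side by side. The sketch below assumes you have noted each action's name and duration from the two traces. The `TraceAction` shape and `slowerActions` helper are hypothetical and are not a TestDino or trace file format.

```typescript
// Hypothetical shape: one entry per action in a trace timeline.
interface TraceAction {
  name: string;       // e.g. "click #submit"; assumed unique within an attempt
  durationMs: number; // how long the action took in this attempt
}

interface SlowAction {
  name: string;
  passingMs: number;
  failingMs: number;
  ratio: number; // failingMs / passingMs
}

// Return actions that took at least `factor` times longer in the failing
// attempt than in the passing one, largest slowdown first.
function slowerActions(
  passing: TraceAction[],
  failing: TraceAction[],
  factor: number = 2
): SlowAction[] {
  const passingByName: Record<string, number> = Object.create(null);
  for (const action of passing) {
    passingByName[action.name] = action.durationMs;
  }

  const result: SlowAction[] = [];
  for (const action of failing) {
    const passingMs = passingByName[action.name];
    // Skip actions that are missing from the passing attempt or took no time.
    if (passingMs === undefined || passingMs <= 0) continue;
    const ratio = action.durationMs / passingMs;
    if (ratio >= factor) {
      result.push({ name: action.name, passingMs, failingMs: action.durationMs, ratio });
    }
  }
  return result.sort((a, b) => b.ratio - a.ratio);
}
```

The default factor of 2 is an arbitrary starting point; raise it if normal run-to-run variance in your suite is high.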

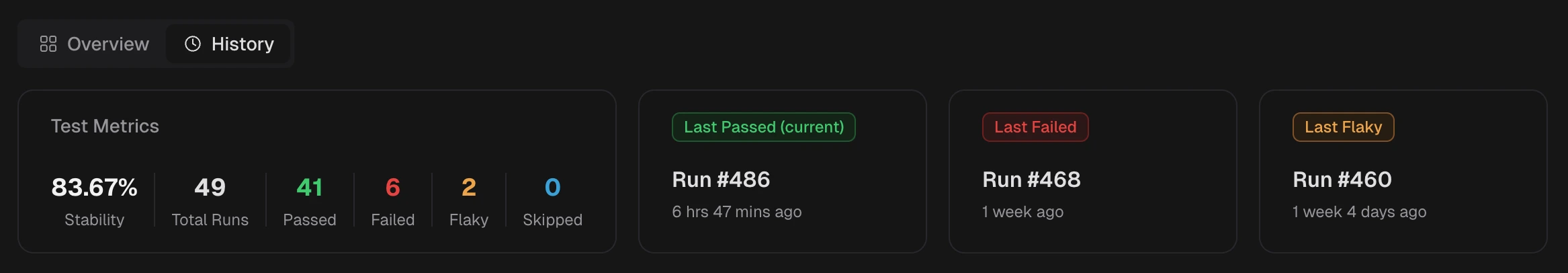

Review Test History

Open the History tab for the test case. Look for patterns:

- Does it fail at specific times of day?

- Does it fail more on certain branches?

- Did flakiness start after a specific commit?

The execution history table shows status, duration, and retries for each run.
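If you copy the history rows into a script, grouping them makes branch and time-of-day patterns easier to see. This sketch assumes a hypothetical `HistoryRun` shape; the `branch` and `startedAt` fields, and the rule for what counts as flaky, are assumptions for illustration rather than TestDino's data model.

```typescript
// Hypothetical shape of one row from the execution history.
interface HistoryRun {
  status: "passed" | "failed" | "flaky";
  retries: number;
  branch: string;
  startedAt: string; // ISO 8601 timestamp
}

interface GroupStats {
  total: number;
  flaky: number;
  rate: number; // flaky / total, between 0 and 1
}

// Count runs per group and compute the share that were flaky.
// Assumption: a run is flaky when marked "flaky" or when it passed after a retry.
function flakyRateBy(
  runs: HistoryRun[],
  keyOf: (run: HistoryRun) => string
): Record<string, GroupStats> {
  const groups: Record<string, GroupStats> = Object.create(null);
  for (const run of runs) {
    const key = keyOf(run);
    const stats = groups[key] || (groups[key] = { total: 0, flaky: 0, rate: 0 });
    stats.total += 1;
    if (run.status === "flaky" || (run.status === "passed" && run.retries > 0)) {
      stats.flaky += 1;
    }
    stats.rate = stats.flaky / stats.total;
  }
  return groups;
}

// Grouping keys: by branch, or by UTC hour of day for time-based patterns.
const byBranch = (run: HistoryRun): string => run.branch;
const byUtcHour = (run: HistoryRun): string =>
  String(new Date(run.startedAt).getUTCHours());
```

Call `flakyRateBy(runs, byBranch)` or `flakyRateBy(runs, byUtcHour)` and compare the `rate` values; a group with very few runs can show an extreme rate by chance, so check `total` as well.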

Check Environment Differences

Open Test Run → Configuration. Compare flaky rates across environments. If a test is flaky only in CI but not locally:

- Check runner resource limits (CPU, memory)

- Verify browser versions match

- Look for parallel test interference

- Check environment-specific configuration

- Verify test data availability

- Look for service dependencies
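Several of these checks come down to comparing two environments setting by setting. The following is a minimal sketch, assuming you record each environment as a flat set of key-value pairs. `EnvSnapshot`, `diffEnvironments`, and the keys used in the usage note are hypothetical.

```typescript
// Hypothetical flat description of an environment (local machine, CI runner, ...).
type EnvValue = string | number | boolean;
type EnvSnapshot = Record<string, EnvValue>;

interface EnvDifference {
  key: string;
  left: EnvValue | undefined;  // undefined when the key is missing on this side
  right: EnvValue | undefined;
}

// List every key whose value differs between the two snapshots,
// including keys present on only one side, sorted by key.
function diffEnvironments(left: EnvSnapshot, right: EnvSnapshot): EnvDifference[] {
  const keys: Record<string, boolean> = Object.create(null);
  for (const key of Object.keys(left)) keys[key] = true;
  for (const key of Object.keys(right)) keys[key] = true;

  const differences: EnvDifference[] = [];
  for (const key of Object.keys(keys).sort()) {
    if (left[key] !== right[key]) {
      differences.push({ key, left: left[key], right: right[key] });
    }
  }
  return differences;
}
```

For example, comparing `{ browser: "chromium 124", workers: 4 }` against `{ browser: "chromium 122", workers: 4 }` reports only the `browser` key, which points at a version mismatch rather than parallelism.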

Analyze Error Messages

Open the Errors tab in the test run. Group failures by error message. Common flaky error patterns:

Timeout waiting for selector

The element appears at inconsistent times. Add explicit waits or check for loading states.

Element is not visible

The element exists but is hidden or obscured. Check for overlays, animations, or scroll position.

Assertion failed: expected X to equal Y

Dynamic data changes between runs. Mock the data or use flexible assertions.
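As an illustration of flexible assertions, the sketch below removes volatile fields before an exact comparison and checks timestamps within a tolerance instead of for equality. `withoutVolatile` and `closeInTime` are hypothetical helpers, and the field names in the usage note are assumptions.

```typescript
// Return a shallow copy of `record` without the listed volatile fields
// (ids, timestamps, random tokens) so the remaining fields can be compared exactly.
function withoutVolatile(
  record: Record<string, unknown>,
  volatileKeys: string[]
): Record<string, unknown> {
  const stable: Record<string, unknown> = {};
  for (const key of Object.keys(record)) {
    if (volatileKeys.indexOf(key) === -1) {
      stable[key] = record[key];
    }
  }
  return stable;
}

// True when two ISO 8601 timestamps are within `toleranceMs` of each other.
function closeInTime(a: string, b: string, toleranceMs: number): boolean {
  const deltaMs = Math.abs(new Date(a).getTime() - new Date(b).getTime());
  return deltaMs <= toleranceMs;
}
```

For example, `withoutVolatile({ id: "a1b2", status: "paid", total: 42 }, ["id"])` leaves `{ status: "paid", total: 42 }`, which can be asserted exactly on every run. The copy is shallow, so volatile fields nested inside other objects are not removed.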

net::ERR_CONNECTION_REFUSED

Service is unavailable intermittently. Add retry logic or mock the endpoint.
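If you export error messages and want to bucket them by the patterns above, a few regular expressions are enough for a first pass. This is a sketch: the `ErrorPattern` type, the labels, and `groupErrors` are illustrative names, not a TestDino API, and the expressions are assumptions about how the messages are worded.

```typescript
interface ErrorPattern {
  label: string;
  matches: RegExp;
  hint: string; // suggested direction, paraphrased from the list above
}

const PATTERNS: ErrorPattern[] = [
  { label: "selector-timeout", matches: /timeout[\s\S]*waiting for selector/i,
    hint: "Add explicit waits or check for loading states" },
  { label: "not-visible", matches: /not visible/i,
    hint: "Check for overlays, animations, or scroll position" },
  { label: "assertion", matches: /assertion failed|expected .* to equal/i,
    hint: "Mock the data or use flexible assertions" },
  { label: "connection-refused", matches: /ERR_CONNECTION_REFUSED/,
    hint: "Add retry logic or mock the endpoint" },
];

// Return the label of the first matching pattern, or "other".
function classify(message: string): string {
  for (const pattern of PATTERNS) {
    if (pattern.matches.test(message)) return pattern.label;
  }
  return "other";
}

// Group raw error messages by pattern label.
function groupErrors(messages: string[]): Record<string, string[]> {
  const groups: Record<string, string[]> = Object.create(null);
  for (const message of messages) {
    const label = classify(message);
    (groups[label] || (groups[label] = [])).push(message);
  }
  return groups;
}
```

Messages that land in `other` are worth reading individually, since they may be genuine failures rather than flakiness.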

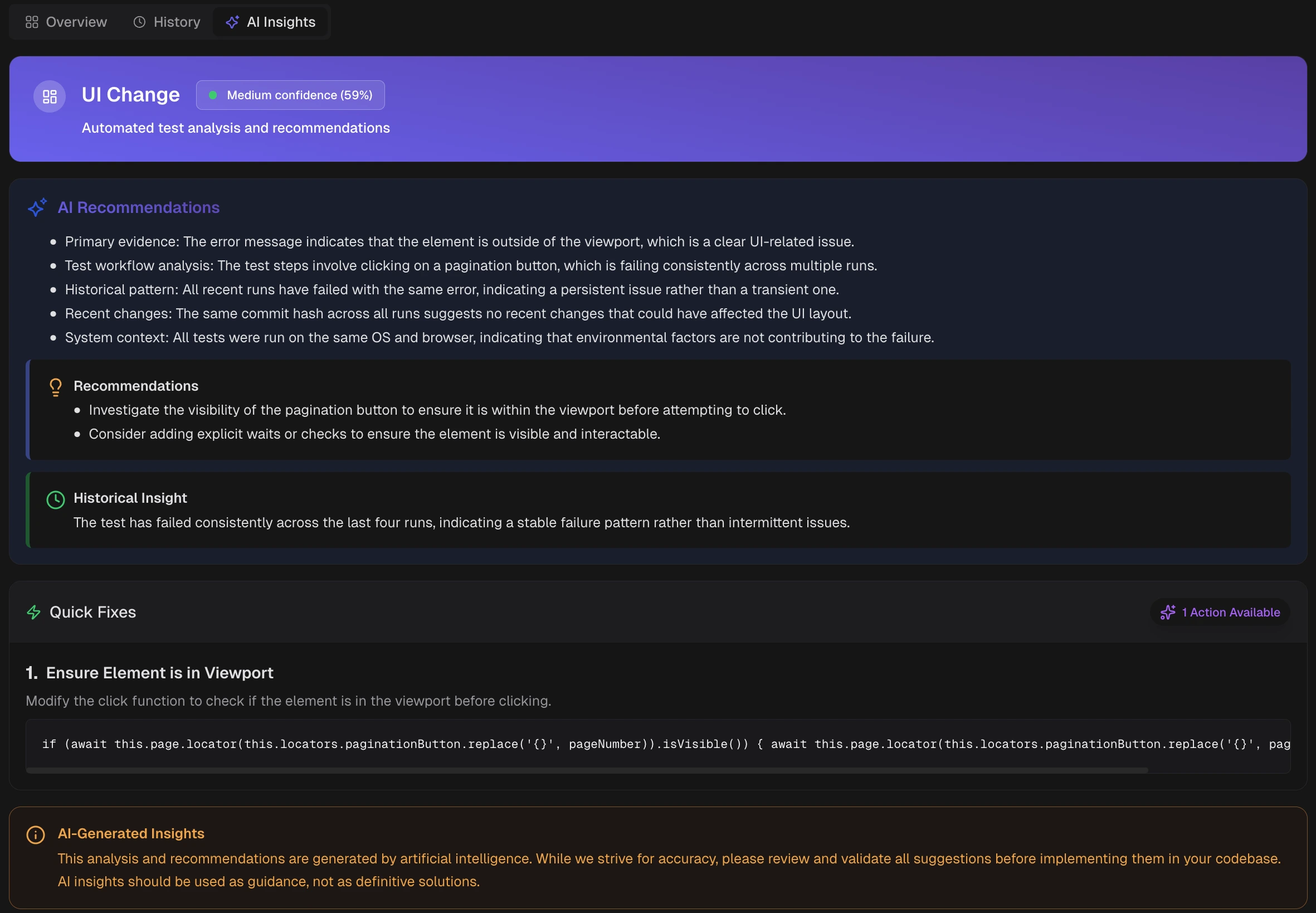

Use AI Insights

Open the AI Insights tab for the test case. TestDino provides:

- Root cause based on error patterns

- Historical context from similar failures

- Suggested fixes

Document Your Findings

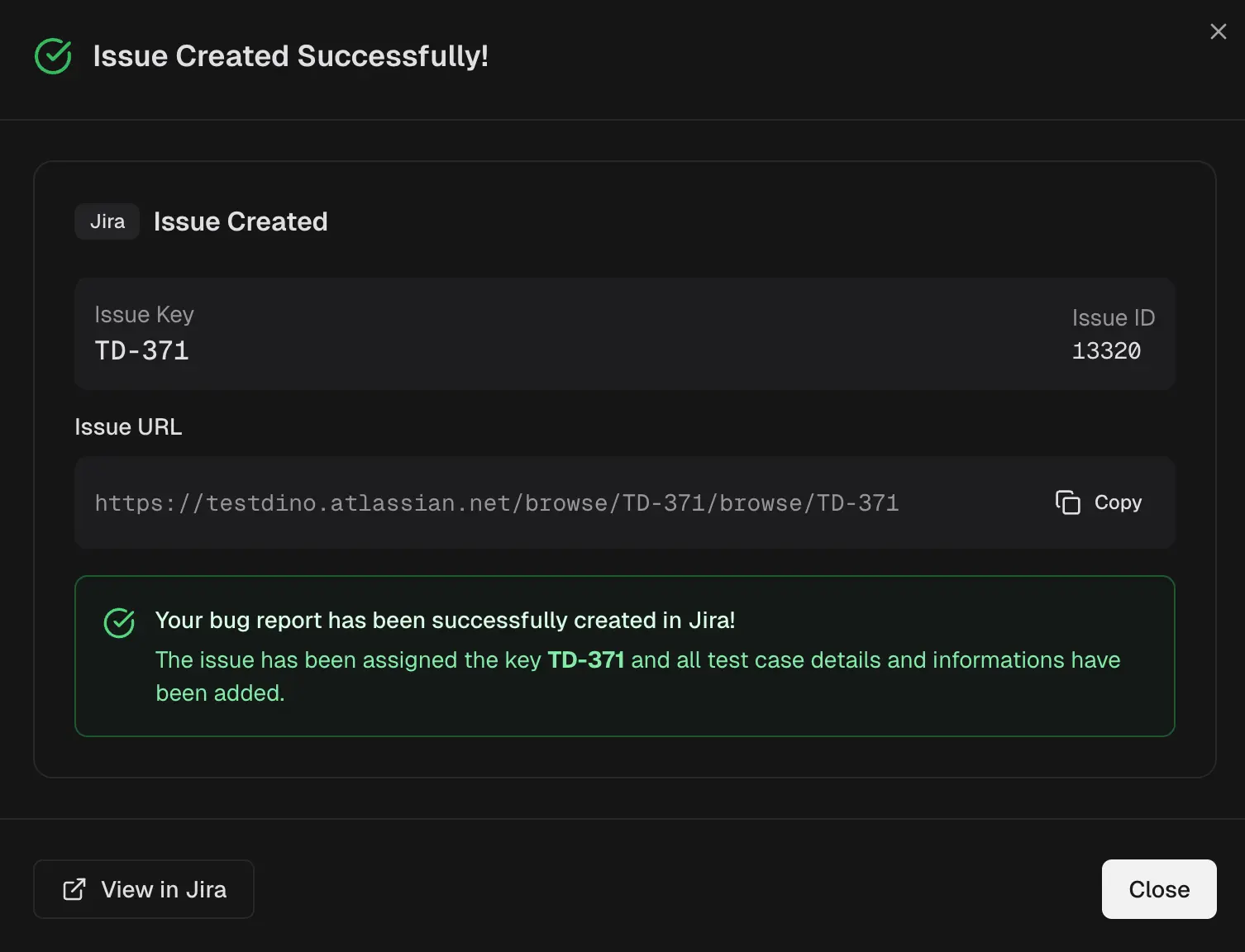

Once you identify the root cause:

1. Create an issue in Jira, Linear, or Asana from TestDino.

2. Include the test name, flaky category, and evidence links.

3. Reference specific runs that demonstrate the issue.
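If your team writes issue descriptions by hand or from a script, a fixed template keeps these details consistent. The sketch below is one possible layout; `FlakyFinding` and `buildIssueBody` are hypothetical and do not reflect the format TestDino itself generates.

```typescript
// Hypothetical record of what the investigation found.
interface FlakyFinding {
  testName: string;
  category: string;        // e.g. "Timing Related"
  rootCause: string;
  evidenceLinks: string[]; // screenshots, traces, logs
  runLinks: string[];      // runs that demonstrate the issue
}

// Build a plain-text issue description with one detail per line.
function buildIssueBody(finding: FlakyFinding): string {
  const bullet = (link: string): string => `- ${link}`;
  return [
    `Test: ${finding.testName}`,
    `Flaky category: ${finding.category}`,
    `Root cause: ${finding.rootCause}`,
    "",
    "Evidence:",
    ...finding.evidenceLinks.map(bullet),
    "",
    "Runs that demonstrate the issue:",
    ...finding.runLinks.map(bullet),
  ].join("\n");
}
```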