Quick Reference

TestDino groups errors at two levels. The table below summarizes what each level shows.

| Grouping Level | What It Shows |
|---|---|
| Error message | Tests that failed with the same error text |
| Error category | Tests grouped by AI classification |

How Error Grouping Works

When multiple tests fail with similar errors, TestDino groups the failures by error message. TestDino matches errors by:

- Error message text
- Stack trace patterns
- Failure location in code
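One way to picture these criteria is an error fingerprint: a key built from the message text with volatile numbers masked, plus the first stack frame as the failure location. The sketch below is a hypothetical illustration. `FailedTest`, `fingerprint`, and `groupByFingerprint` are invented names, and using only the top frame is a simplification of "stack trace patterns". It is not TestDino's matching algorithm.

```typescript
// Hypothetical record for one failed test; not TestDino's data model.
interface FailedTest {
  title: string;
  message: string;
  stack: string;
}

// Mask volatile values (timeouts, counts, ports) and collapse whitespace so
// that near-identical messages produce the same text.
function normalizeMessage(message: string): string {
  return message.replace(/\d+/g, "<n>").replace(/\s+/g, " ").trim();
}

// Use the first "at ..." stack frame as the failure location, e.g. "/tests/helpers.ts:8".
function failureLocation(stack: string): string {
  for (const line of stack.split("\n")) {
    const trimmed = line.trim();
    if (!trimmed.startsWith("at ")) continue;
    const match = trimmed.match(/([^\s()]+):(\d+):\d+\)?$/);
    if (match) return `${match[1]}:${match[2]}`;
  }
  return "unknown";
}

function fingerprint(test: FailedTest): string {
  return `${normalizeMessage(test.message)} @ ${failureLocation(test.stack)}`;
}

// Map each fingerprint to the titles of the tests that share it.
function groupByFingerprint(tests: FailedTest[]): Map<string, string[]> {
  const groups = new Map<string, string[]>();
  for (const test of tests) {
    const key = fingerprint(test);
    const titles = groups.get(key) ?? [];
    titles.push(test.title);
    groups.set(key, titles);
  }
  return groups;
}

// Two timeouts with different durations, raised from the same helper line, share one group.
const exampleGroups = groupByFingerprint([
  { title: "checkout", message: "Timeout 30000ms exceeded.", stack: "Error\n    at waitForCart (/tests/helpers.ts:8:11)" },
  { title: "refund", message: "Timeout 15000ms exceeded.", stack: "Error\n    at waitForCart (/tests/helpers.ts:8:11)" },
]);
console.log(exampleGroups);
```

Masking numbers lets a 30-second and a 15-second timeout from the same line fall into one group. The trade-off is that genuinely different values (for example, distinct HTTP status codes) also merge.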

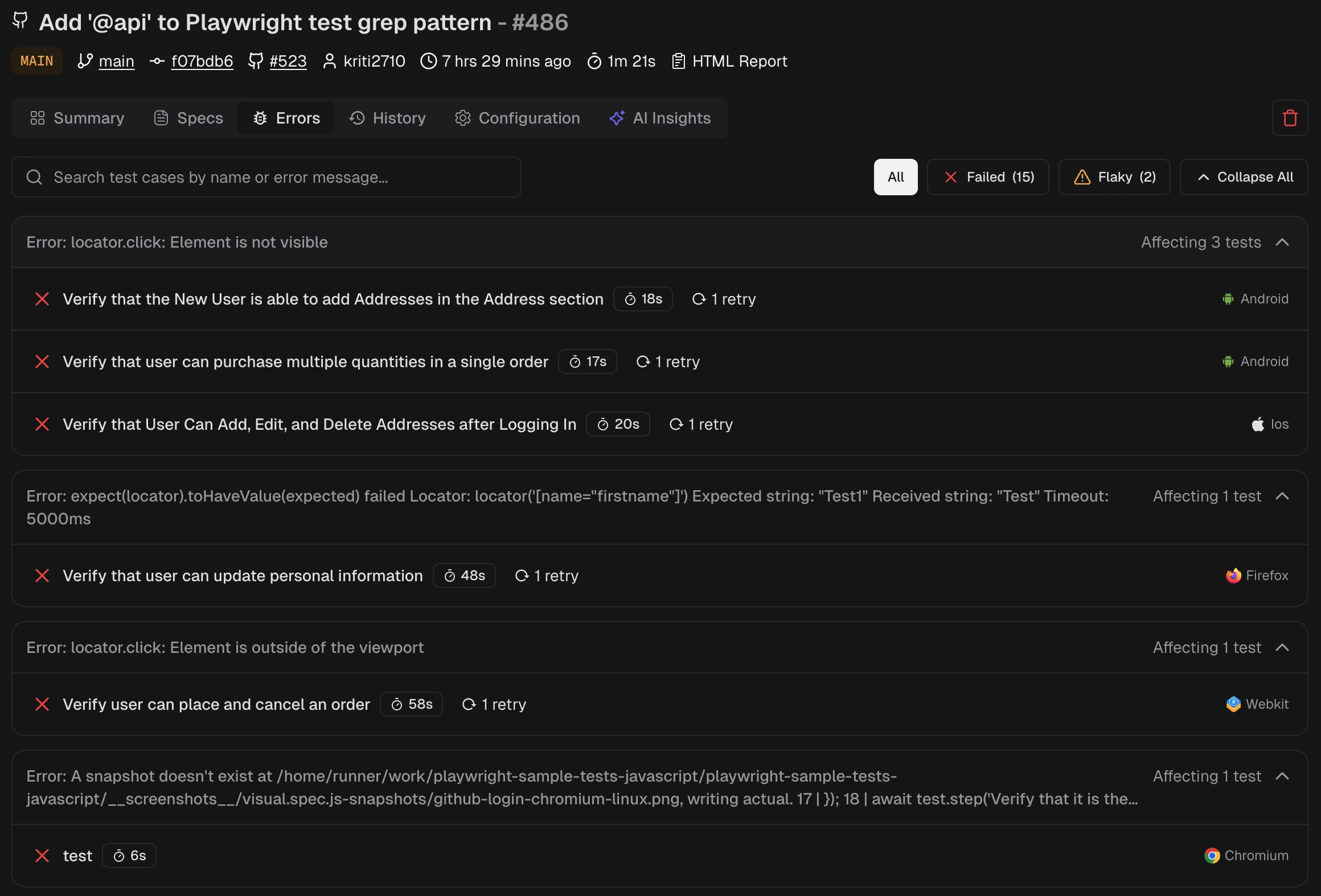

View Error Groups

- Open a test run

- Go to the Errors tab

- Expand an error group to see all affected tests

View Error Categories

TestDino's AI assigns each error to one of the following categories. Analytics shows how these errors trend over time. A new error group that appears right after a deployment usually indicates a regression.

| Category | Description |
|---|---|
| Assertion Failures | Expected values did not match actual values |
| Timeout Issues | Actions or waits exceeded time limits |
| Element Not Found | Locators did not resolve to elements |
| Network Issues | HTTP requests failed or returned errors |
| JavaScript Errors | Runtime errors in browser or test code |
| Browser Issues | Browser launch, context, or rendering problems |
| Other Failures | Errors outside the above categories |
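TestDino assigns these categories with AI. As a rough mental model of what each category covers, the sketch below maps typical Playwright error text to the table's categories using ordered regular-expression rules. `categorize` and its patterns are hypothetical. Rule order matters: a click that times out while waiting for a missing element lands in Timeout Issues here, which is exactly the kind of case a context-aware classifier may judge differently.

```typescript
type ErrorCategory =
  | "Assertion Failures"
  | "Timeout Issues"
  | "Element Not Found"
  | "Network Issues"
  | "JavaScript Errors"
  | "Browser Issues"
  | "Other Failures";

// Ordered rules: the first pattern that matches decides the category.
// These patterns are illustrative assumptions, not TestDino's classifier.
const categoryRules: Array<[RegExp, ErrorCategory]> = [
  [/expect\(/, "Assertion Failures"],
  [/strict mode violation|not attached to the DOM/i, "Element Not Found"],
  [/Timeout \d+ms exceeded|Timed out/i, "Timeout Issues"],
  [/net::ERR_|ECONNREFUSED/, "Network Issues"],
  [/\b(ReferenceError|TypeError|SyntaxError|RangeError)\b/, "JavaScript Errors"],
  [/browserType\.launch|has been closed/i, "Browser Issues"],
];

function categorize(message: string): ErrorCategory {
  for (const [pattern, category] of categoryRules) {
    if (pattern.test(message)) return category;
  }
  // Nothing matched: fall back to the catch-all category.
  return "Other Failures";
}

console.log(categorize("Error: net::ERR_CONNECTION_REFUSED")); // Network Issues
```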

AI Failure Classification

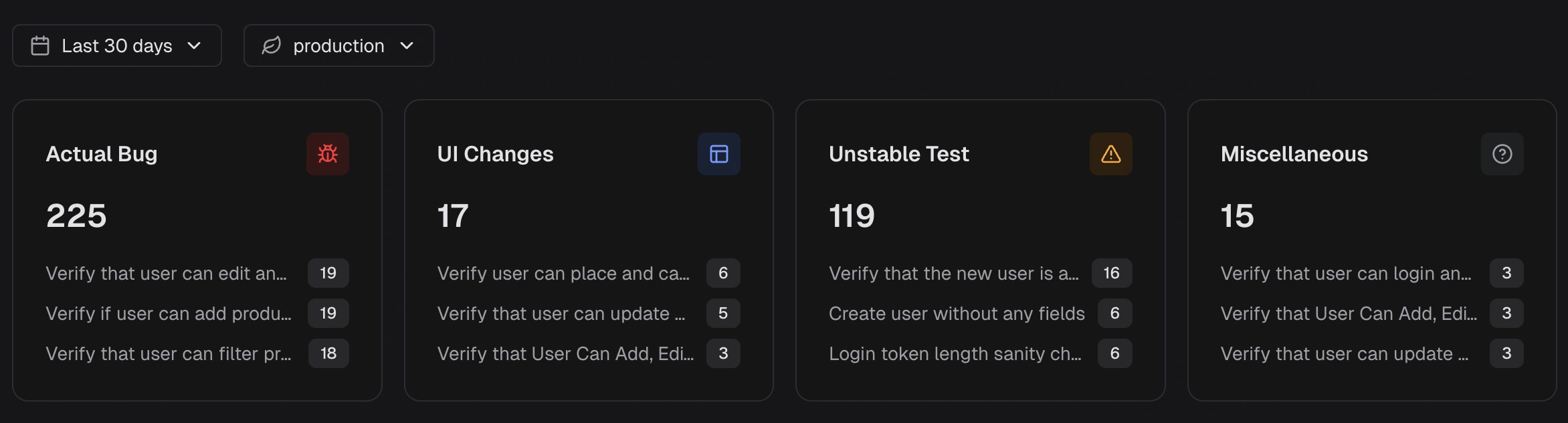

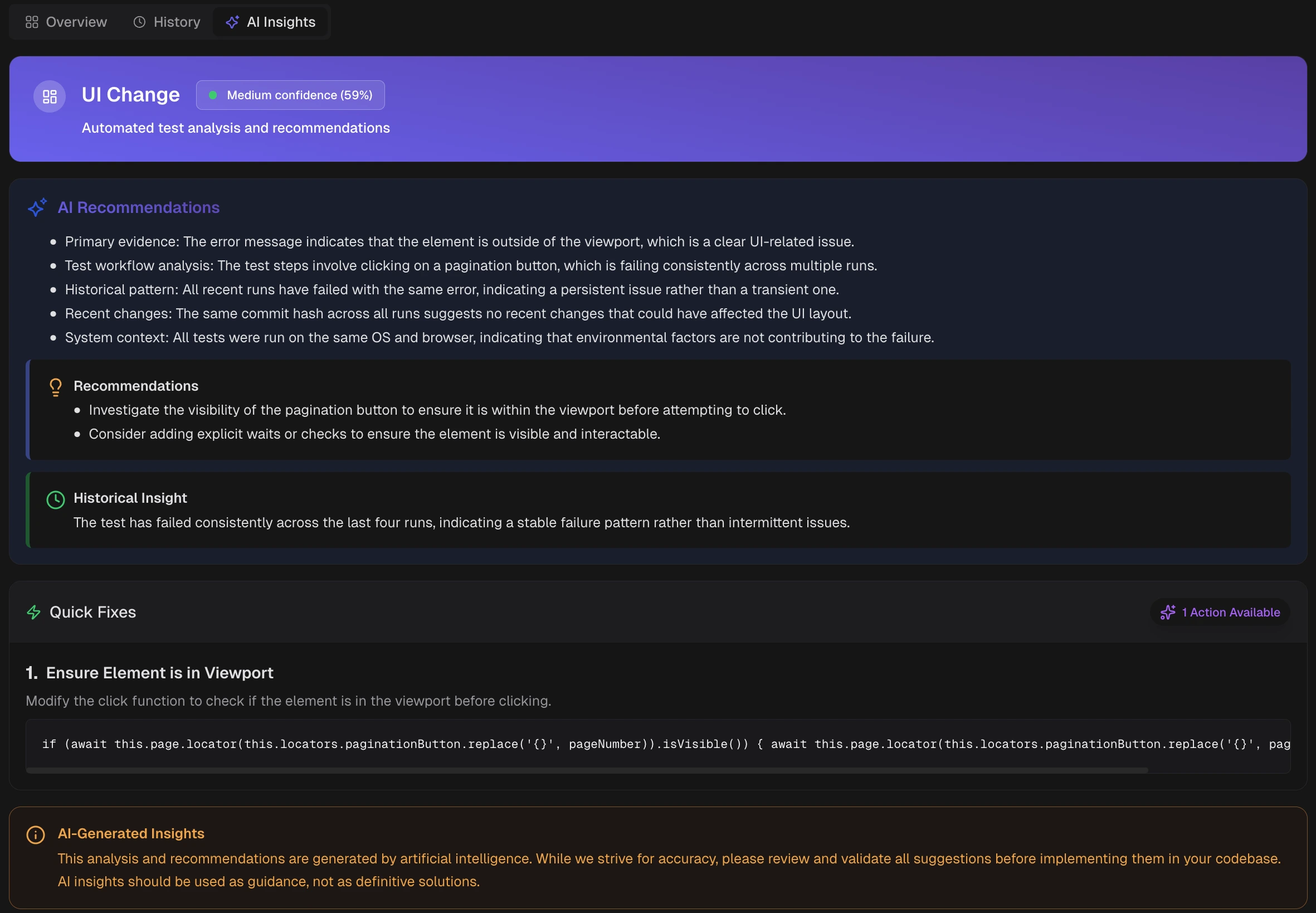

Error groups inherit AI classifications:

- Actual Bug: A repeatable product defect. The same error occurs across runs. Fix the application code.
- UI Change: The interface changed, and the test no longer matches. Update selectors or assertions.
- Unstable Test: Intermittent failure from timing, state, or environment issues. Stabilize the test.
- Miscellaneous: Environment or configuration problems. Fix infrastructure or CI setup.

Each classification includes a confidence score.

AI Insights View

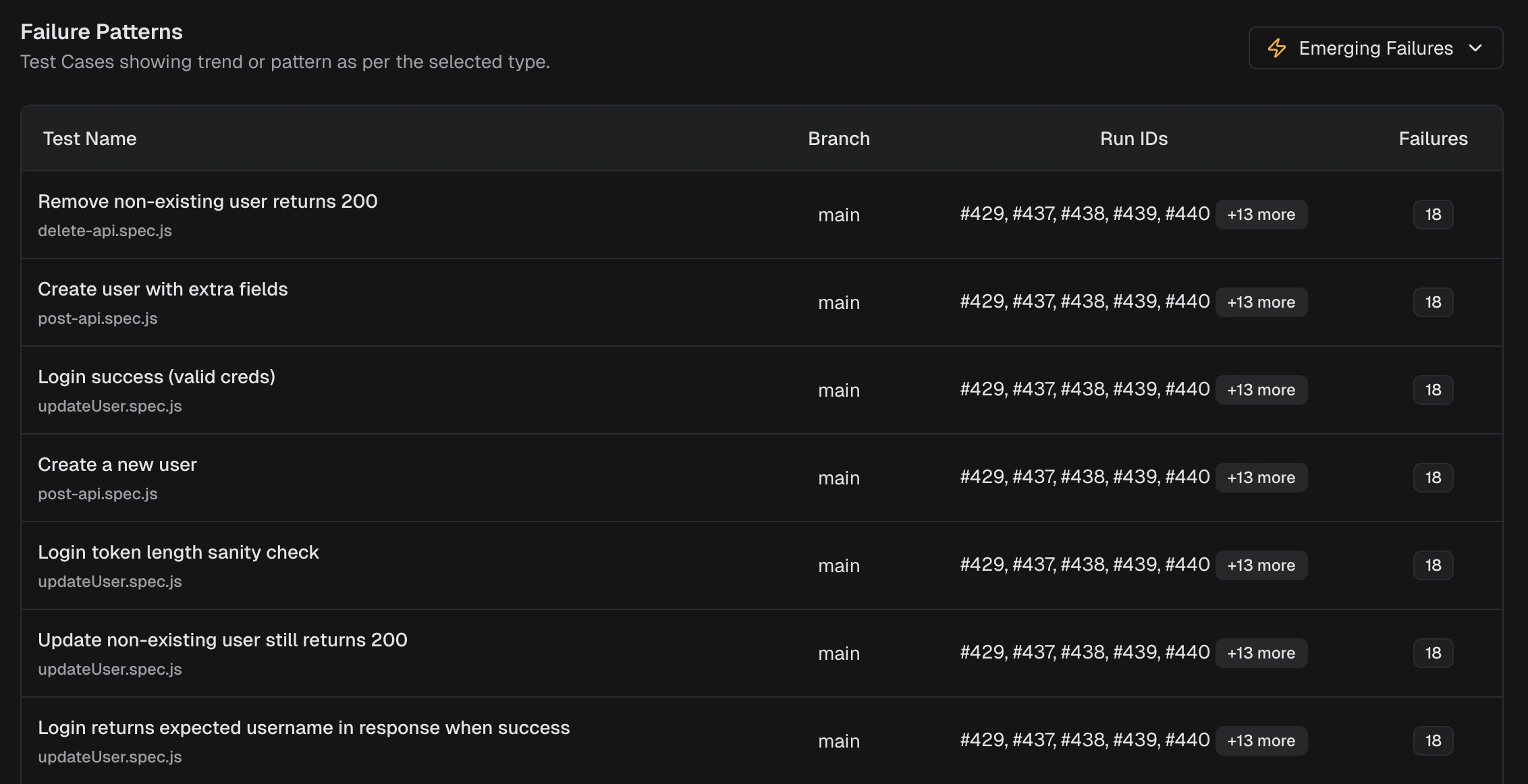

Open AI Insights from the sidebar for a cross-run view. Under Key Metrics, it shows the count and the affected tests for each classification (Actual Bug, UI Change, Unstable Test, Miscellaneous).

Failure Patterns

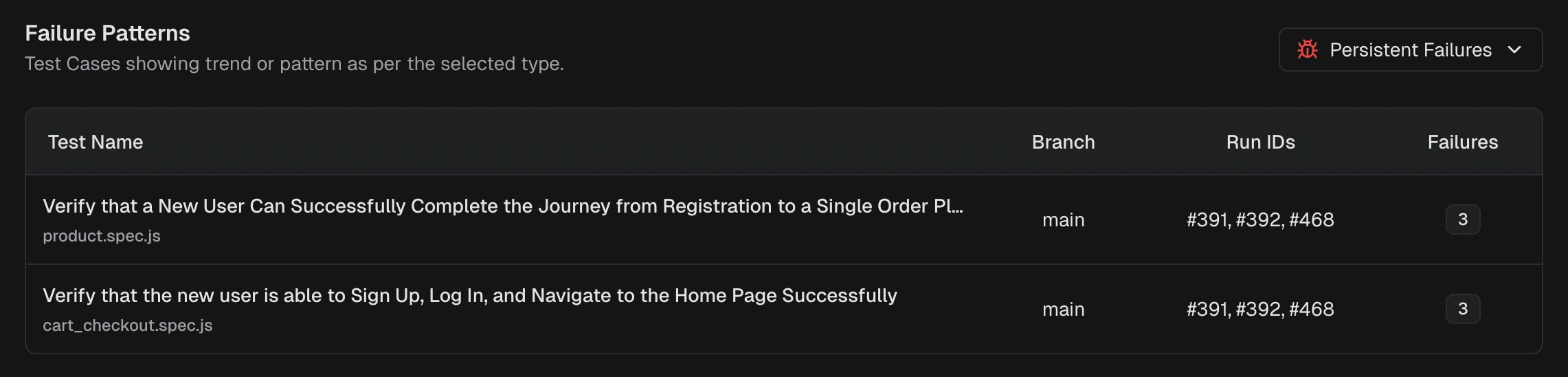

Errors that recur across runs fall into two patterns:

- Persistent Failures: Tests failing across multiple runs.
- Emerging Failures: Tests that started failing recently.
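The two patterns can be told apart from run history alone. This sketch assumes runs are ordered oldest to newest and that each run is reduced to the set of failing test IDs. The `findFailurePatterns` function, its `recent` window of two runs, and the exact definitions of "persistent" and "emerging" are hypothetical choices, not TestDino's thresholds.

```typescript
// IDs of the tests that failed in one run (hypothetical input shape).
type FailedIds = Set<string>;

interface FailurePatterns {
  persistent: string[];
  emerging: string[];
}

// persistent: failed in every supplied run.
// emerging: failed in each of the last `recent` runs but in none before them.
function findFailurePatterns(runs: FailedIds[], recent = 2): FailurePatterns {
  const result: FailurePatterns = { persistent: [], emerging: [] };
  if (runs.length === 0) return result;
  const window = Math.max(1, recent); // slice(-0) would return every run
  const latest = runs[runs.length - 1];
  const recentRuns = runs.slice(-window);
  const earlierRuns = runs.slice(0, Math.max(0, runs.length - window));
  for (const id of Array.from(latest)) {
    if (runs.every((run) => run.has(id))) {
      result.persistent.push(id);
    } else if (recentRuns.every((run) => run.has(id)) && earlierRuns.every((run) => !run.has(id))) {
      result.emerging.push(id);
    }
  }
  return result;
}

// "login" fails everywhere; "search" started failing two runs ago; "cart" failed only once.
const patterns = findFailurePatterns([
  new Set(["login"]),
  new Set(["login"]),
  new Set(["login", "search"]),
  new Set(["login", "search", "cart"]),
]);
console.log(patterns); // persistent: ["login"], emerging: ["search"]
```

A single new failure, like `cart` above, matches neither pattern until it repeats. That keeps one-off flakes out of the emerging list at the cost of reporting real regressions one run later.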

Test Case AI Insights

Open a specific test case and go to the AI Insights tab. This shows:

- Category and confidence score
- Root cause analysis
- Historical context
- Suggested fixes

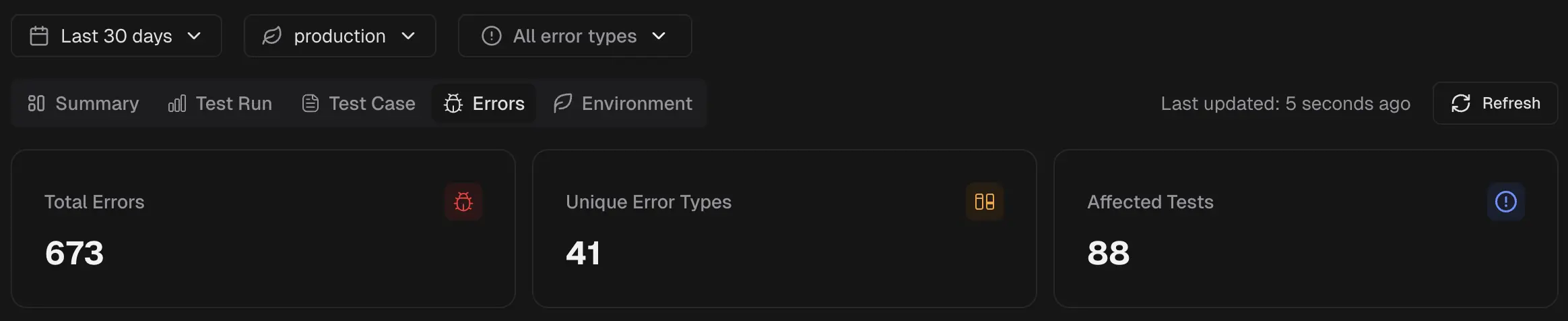

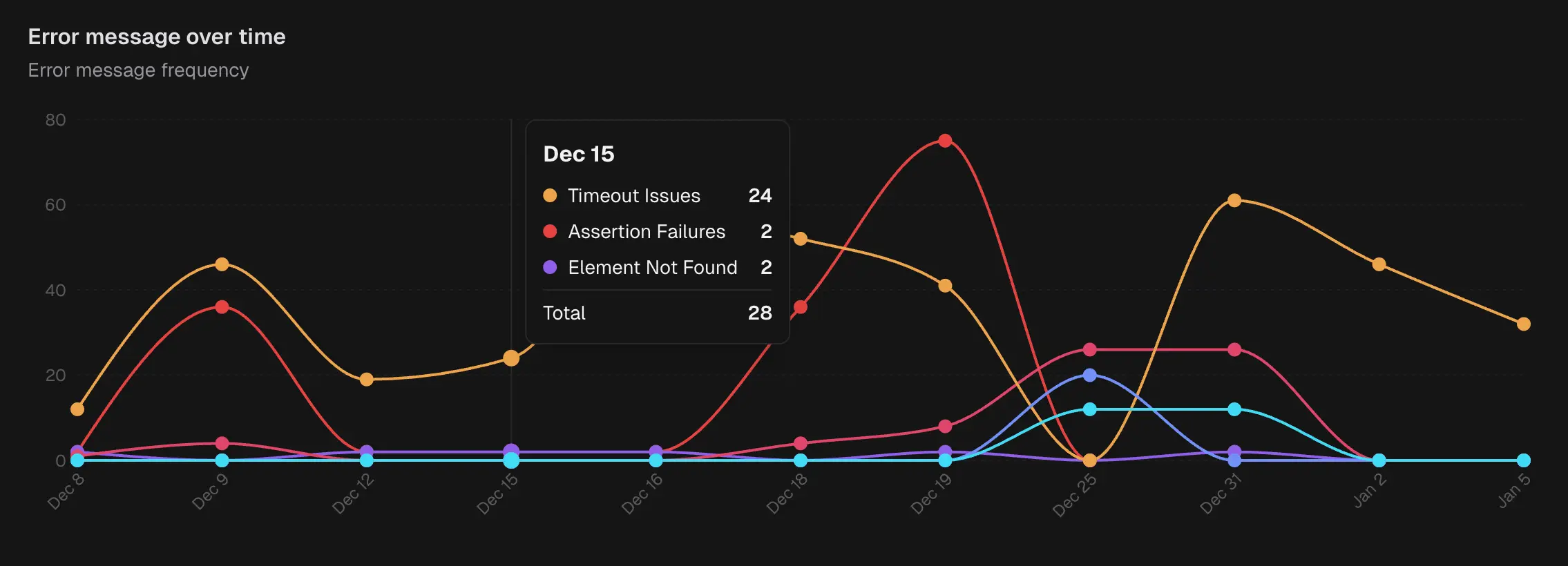

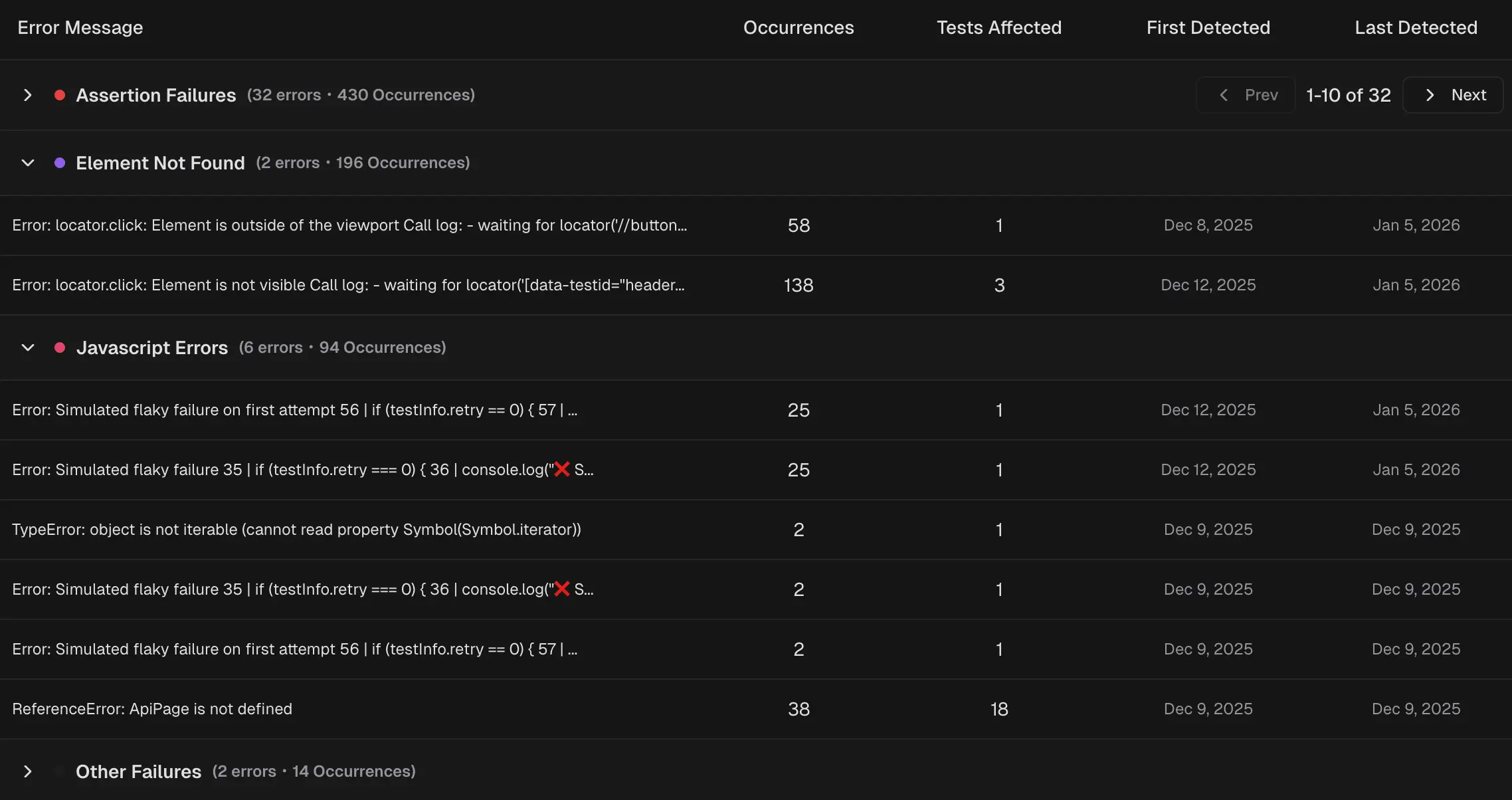

Error Analytics

Open Analytics → Errors for trends over time:

- Error Message Over Time: Line graph showing error frequency by category. Use it to identify spikes and trends.
- Error Categories Table: Breakdown of errors by type with occurrence counts, affected tests, and first/last detected dates.


Click any error to see all affected test cases.

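The Error Categories Table columns can be derived from raw error records, which is useful for spot-checking the dashboard against your own CI data. This sketch assumes a hypothetical `ErrorOccurrence` record (category, test ID, detection time). It is not TestDino's export format or API.

```typescript
// Hypothetical raw record: one error occurrence in one test.
interface ErrorOccurrence {
  category: string;
  testId: string;
  detectedAt: Date;
}

interface CategoryRow {
  category: string;
  occurrences: number;
  affectedTests: number;
  firstDetected: Date;
  lastDetected: Date;
}

// Build one row per category, sorted by occurrence count (highest first).
function summarizeByCategory(records: ErrorOccurrence[]): CategoryRow[] {
  const acc = new Map<string, { count: number; tests: Set<string>; first: Date; last: Date }>();
  for (const r of records) {
    const row = acc.get(r.category);
    if (!row) {
      acc.set(r.category, { count: 1, tests: new Set([r.testId]), first: r.detectedAt, last: r.detectedAt });
      continue;
    }
    row.count += 1;
    row.tests.add(r.testId); // a Set counts each affected test once
    if (r.detectedAt.getTime() < row.first.getTime()) row.first = r.detectedAt;
    if (r.detectedAt.getTime() > row.last.getTime()) row.last = r.detectedAt;
  }
  return Array.from(acc.entries())
    .map(([category, row]) => ({
      category,
      occurrences: row.count,
      affectedTests: row.tests.size,
      firstDetected: row.first,
      lastDetected: row.last,
    }))
    .sort((a, b) => b.occurrences - a.occurrences);
}
```

Occurrences and affected tests diverge when the same test fails repeatedly. A category with many occurrences but few affected tests points at a handful of chronically failing tests rather than a broad problem.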

Common Error Patterns

Element Not Found


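```
Error: locator.click: Error: strict mode violation
```

A strict mode violation means the locator matched more than one element. When a selector breaks, every test that targets the same element fails, so fixing that one selector resolves the whole group.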
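Because the offending locator is named in the full error message, failures can be grouped by locator to find the one selector worth fixing. This sketch assumes Playwright's usual `strict mode violation: <locator> resolved to N elements` wording (check it against your own logs). `strictModeLocator` and `groupByLocator` are hypothetical helpers.

```typescript
// Pull the offending locator out of a strict mode violation message.
// Assumes the "...strict mode violation: <locator> resolved to <n> elements" wording.
function strictModeLocator(message: string): string | null {
  const match = message.match(/strict mode violation: (.+?) resolved to \d+ elements/);
  return match ? match[1] : null;
}

// Map each offending locator to the tests that failed on it.
function groupByLocator(failures: { test: string; message: string }[]): Map<string, string[]> {
  const byLocator = new Map<string, string[]>();
  for (const f of failures) {
    const locator = strictModeLocator(f.message);
    if (locator === null) continue; // not a strict mode violation
    const tests = byLocator.get(locator) ?? [];
    tests.push(f.test);
    byLocator.set(locator, tests);
  }
  return byLocator;
}
```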

Timeout

```
Error: Timeout 30000ms exceeded
```

This often indicates a shared dependency: a slow API, a missing service, or an environment issue. Check what the affected tests have in common.
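One way to check what the affected tests have in common is to record which services or fixtures each test depends on, then intersect those lists across the timed-out tests. The dependency map and `sharedDependencies` below are hypothetical; TestDino does not necessarily track dependencies this way.

```typescript
// Return the dependencies shared by every affected test, sorted alphabetically.
// `deps` maps a test name to the services/fixtures it uses (hypothetical data).
function sharedDependencies(affected: string[], deps: Record<string, string[]>): string[] {
  if (affected.length === 0) return [];
  let common = new Set(deps[affected[0]] ?? []);
  for (const test of affected.slice(1)) {
    const next = new Set(deps[test] ?? []);
    // Keep only the entries this test also depends on.
    common = new Set(Array.from(common).filter((d) => next.has(d)));
  }
  return Array.from(common).sort();
}

const deps: Record<string, string[]> = {
  checkout: ["payments-api", "postgres"],
  refund: ["payments-api", "auth-service"],
  invoice: ["payments-api", "postgres"],
};
console.log(sharedDependencies(["checkout", "refund", "invoice"], deps)); // [ 'payments-api' ]
```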

Assertion Failure

```
Error: expect(received).toBe(expected)
```

The same assertion failing across tests may indicate a data issue or an application bug affecting multiple pages.
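When many tests fail with identical expected and received values, they likely share a cause, such as the same bad test data. This sketch counts failures per value pair. It assumes the plain-text `Expected:` / `Received:` lines that Playwright's `toBe` failures usually include (no ANSI color codes). `expectedReceived` and `countByValuePair` are hypothetical helpers.

```typescript
// Extract the "Expected:" and "Received:" lines from an assertion message.
function expectedReceived(message: string): string | null {
  const expected = message.match(/^\s*Expected: (.*)$/m);
  const received = message.match(/^\s*Received: (.*)$/m);
  if (!expected || !received) return null;
  return `expected ${expected[1]}, received ${received[1]}`;
}

// Count failures per expected/received pair; messages without both lines are skipped.
function countByValuePair(messages: string[]): Map<string, number> {
  const counts = new Map<string, number>();
  for (const message of messages) {
    const key = expectedReceived(message);
    if (key === null) continue;
    counts.set(key, (counts.get(key) ?? 0) + 1);
  }
  return counts;
}
```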

Network Error

```
Error: net::ERR_CONNECTION_REFUSED
```

The service is unavailable. All tests that depend on that service fail together.

Create Tickets from Error Groups

When an error group needs attention:

See also: AI Insights for detailed classification information