TestDino uses AI across the platform to classify failures, detect patterns, and recommend fixes. Every AI feature works on real execution data from your test runs.

All AI features are enabled by default. Disable individual features or all AI analysis from Project Settings. Changes apply from the next test run.

Quick Reference

| Feature | Where | What it does |
|---|---|---|
| Failure Classification | Test runs, test cases, dashboard | Labels failures as Bug, UI Change, Unstable, or Misc |
| Failure Patterns | AI Insights, error grouping | Identifies persistent and emerging failures across runs |
| Test Case Analysis | Individual test cases | Provides root cause, recommendations, and quick fixes |
| Error Grouping | Test runs, analytics | Groups similar errors by message and stack trace |
| QA Dashboard | Dashboard | Summarizes failure categories and trends |
| MCP Integration | AI assistants | Connects Claude, Cursor, and other AI tools to your test data |

Failure Classification

Every failed test receives an AI-assigned category with a confidence score.

| Category | Meaning |
|---|---|
| Actual Bug | Consistent failure indicating a product defect. Fix first. |
| UI Change | Selector or DOM change broke a test step. Update locators. |
| Unstable Test | Intermittent failure that passes on retry. Stabilize or quarantine. |
| Miscellaneous | Setup, data, or CI issue outside the above categories. |

Correct misclassifications through the feedback form on any test case. This improves future analysis.
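
As an illustration of how the category and confidence score can drive triage, here is a minimal sketch. The category names come from the table above and "Actual Bug" is listed first because the table says to fix it first. Everything else is an assumption made for the example: the `ClassifiedFailure` shape, the 0.0 to 1.0 confidence scale, and the order of the remaining categories are hypothetical and do not describe a TestDino API or export format.

```python
from dataclasses import dataclass

# Category names are taken from the table above. Placing "Actual Bug" first
# follows the "Fix first" guidance; the order of the rest is an assumption.
TRIAGE_ORDER = ["Actual Bug", "UI Change", "Unstable Test", "Miscellaneous"]


@dataclass
class ClassifiedFailure:
    """Hypothetical record for one failed test (not a TestDino type)."""
    test_name: str
    category: str
    confidence: float  # assumed 0.0-1.0 scale; the real scale may differ


def triage(failures):
    """Sort by category priority, then by descending confidence."""
    return sorted(
        failures,
        key=lambda f: (TRIAGE_ORDER.index(f.category), -f.confidence),
    )


failures = [
    ClassifiedFailure("checkout total", "Unstable Test", 0.72),
    ClassifiedFailure("login redirect", "Actual Bug", 0.91),
    ClassifiedFailure("profile avatar", "UI Change", 0.85),
    ClassifiedFailure("cart badge", "Actual Bug", 0.64),
]

for f in triage(failures):
    print(f"{f.category:<14} {f.confidence:.2f}  {f.test_name}")
```

Sorting on confidence within a category puts the most certain classifications at the top, which is one reasonable way to decide what to review first.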

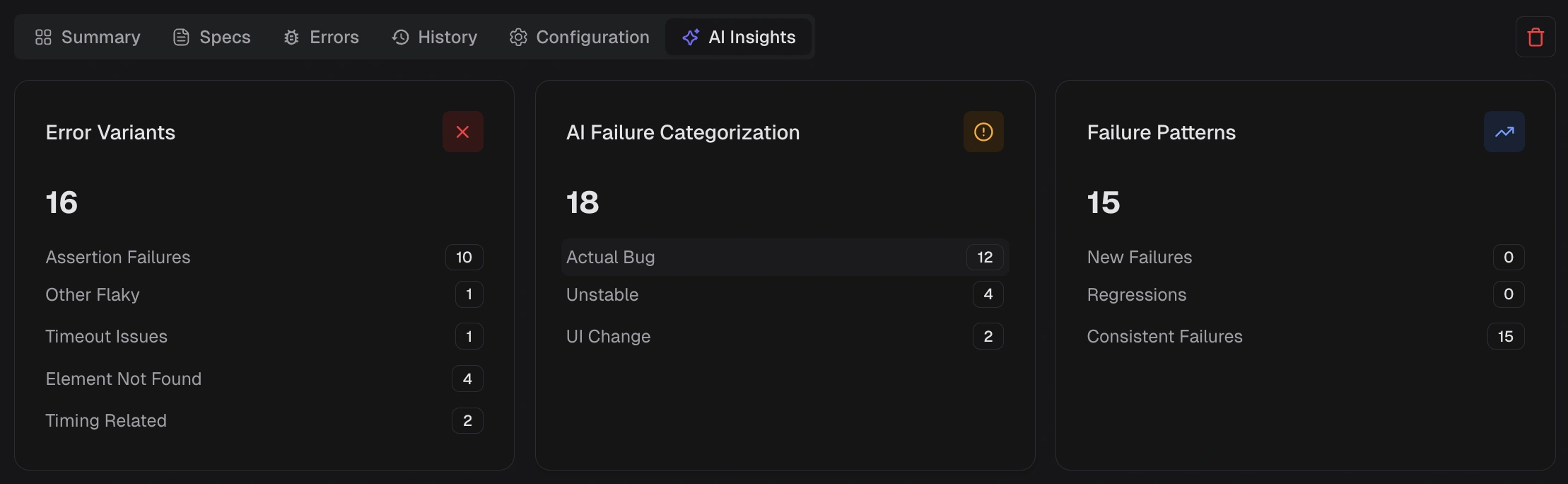

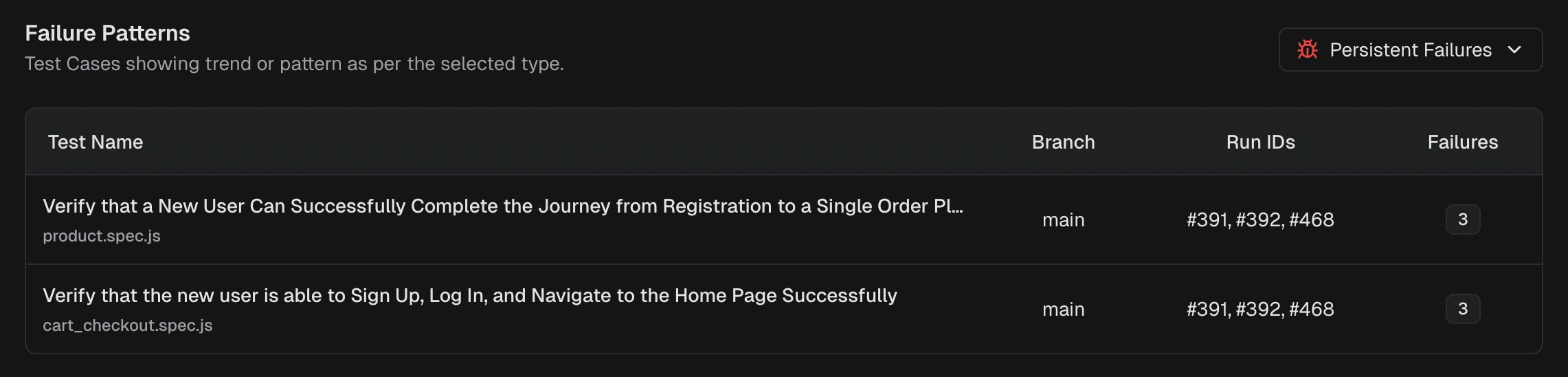

Failure Patterns

AI Insights identifies how failures behave across recent runs.

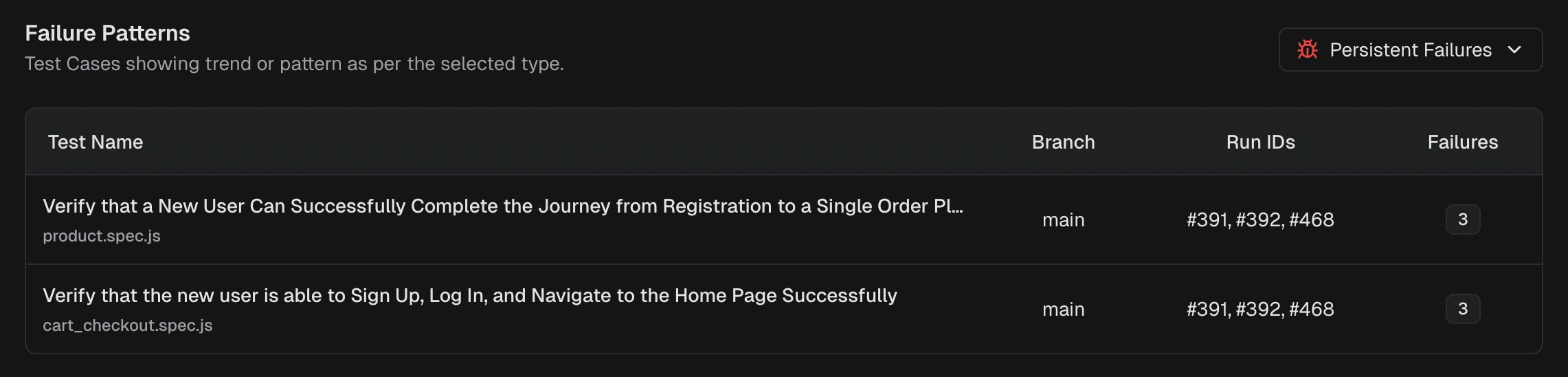

Persistent Failures

Tests failing across multiple runs in the selected window. These are high-impact, recurring problems.

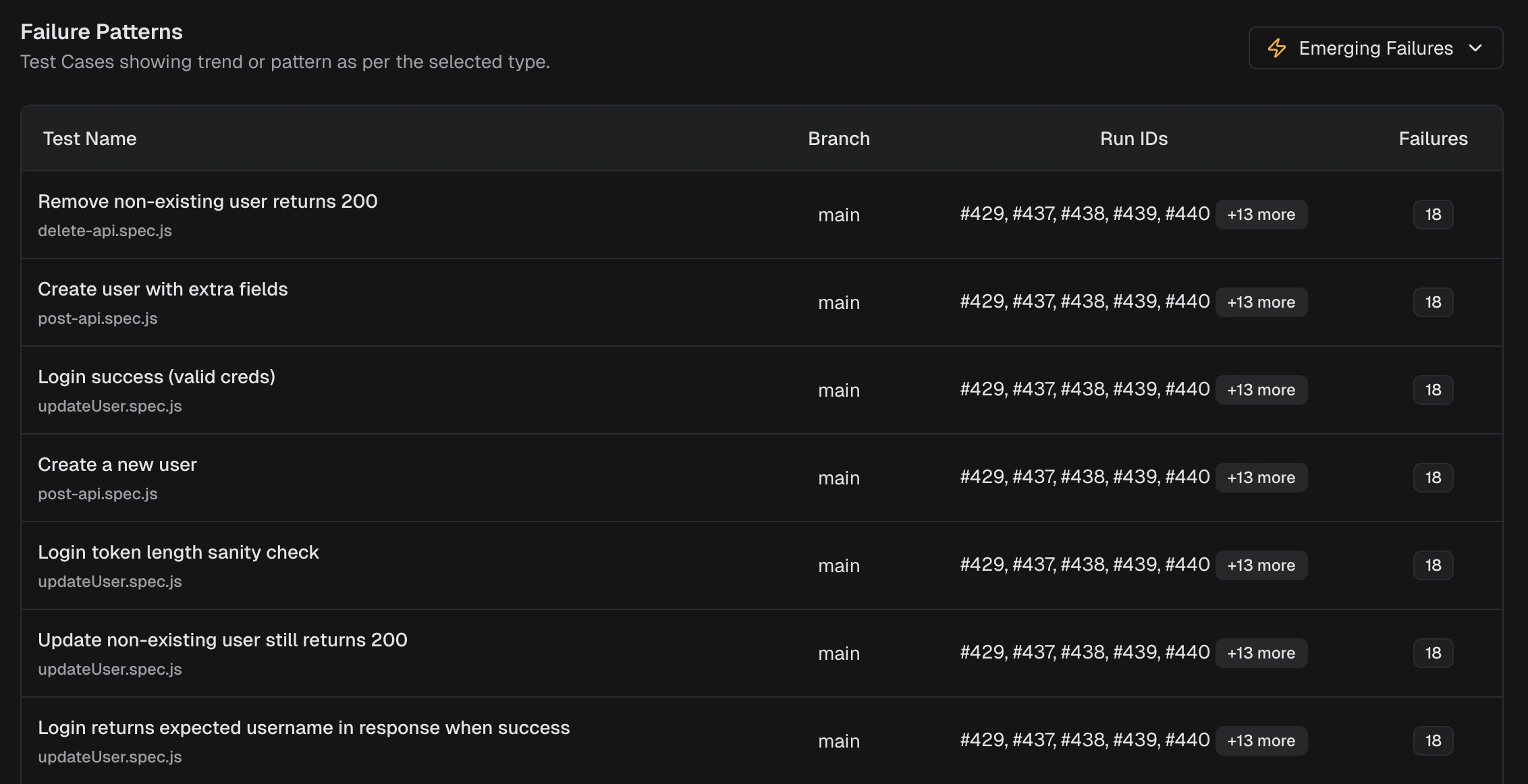

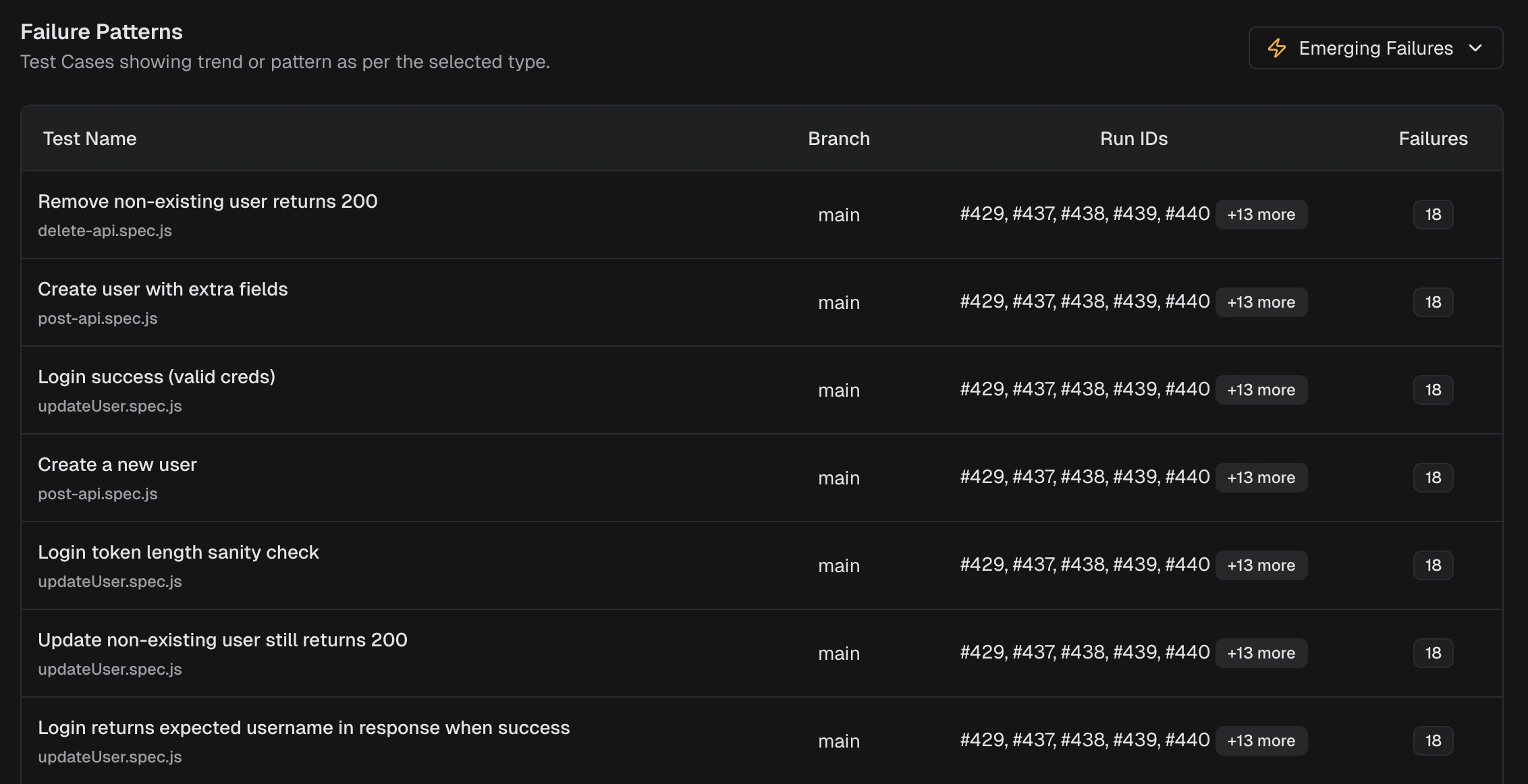

Emerging Failures

Tests that started failing recently and have failed again in later runs. Review these to catch regressions early.

Pattern types also include:

- New Failures — tests that started failing within the selected window

- Regressions — tests that passed recently but now fail again

- Consistent Failures — tests failing across most or all recent runs

See AI Insights for the full cross-run view.
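
The pattern types above can be pictured as rules over a test's pass/fail history within the selected window. The sketch below is one possible reading of those definitions, not TestDino's actual detection logic: the `classify_pattern` function, the boolean history format, and the 0.8 threshold for "most or all recent runs" are all assumptions chosen for illustration.

```python
def classify_pattern(history, consistent_threshold=0.8):
    """Label a test's history with one of the pattern types above.

    history: list of bools, oldest run first, True means the run passed.
    consistent_threshold: assumed cut-off for "most or all recent runs".
    """
    if not history or history[-1]:
        return None  # no data, or the latest run passed: nothing to flag

    fail_ratio = history.count(False) / len(history)
    if fail_ratio >= consistent_threshold:
        return "Consistent Failure"

    # Count the unbroken run of failures at the end of the window.
    streak = 0
    for passed in reversed(history):
        if passed:
            break
        streak += 1

    # A failure before the current streak means the test failed, recovered,
    # and is now failing again.
    if False in history[:-streak]:
        return "Regression"
    return "New Failure"


print(classify_pattern([True, True, True, False]))
print(classify_pattern([False, True, True, False]))
print(classify_pattern([True, False, False, False, False]))
```

Under these assumed rules, a test with no earlier failures in the window is a New Failure, one that failed, recovered, and fails again is a Regression, and one failing in at least 80% of runs is a Consistent Failure.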

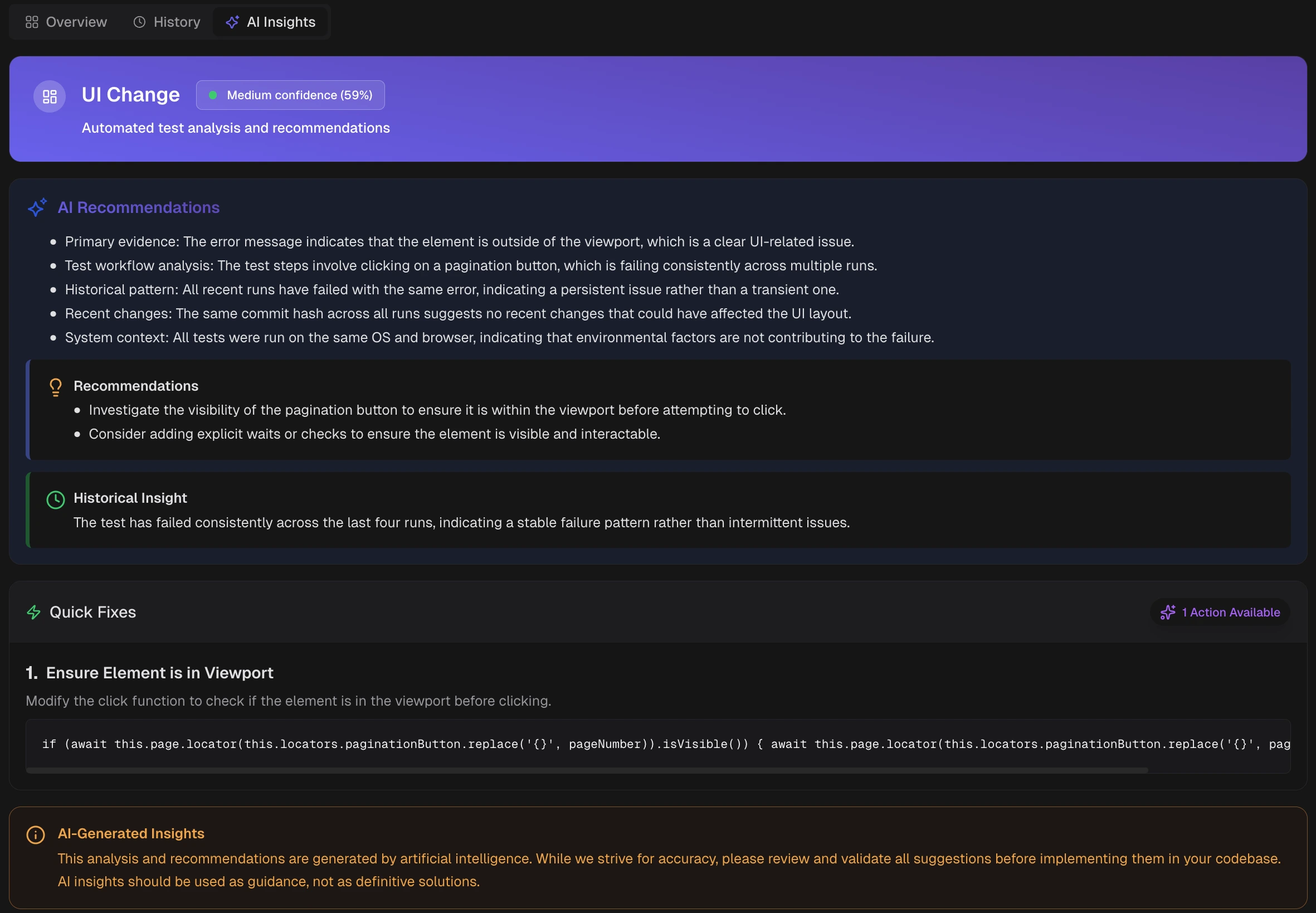

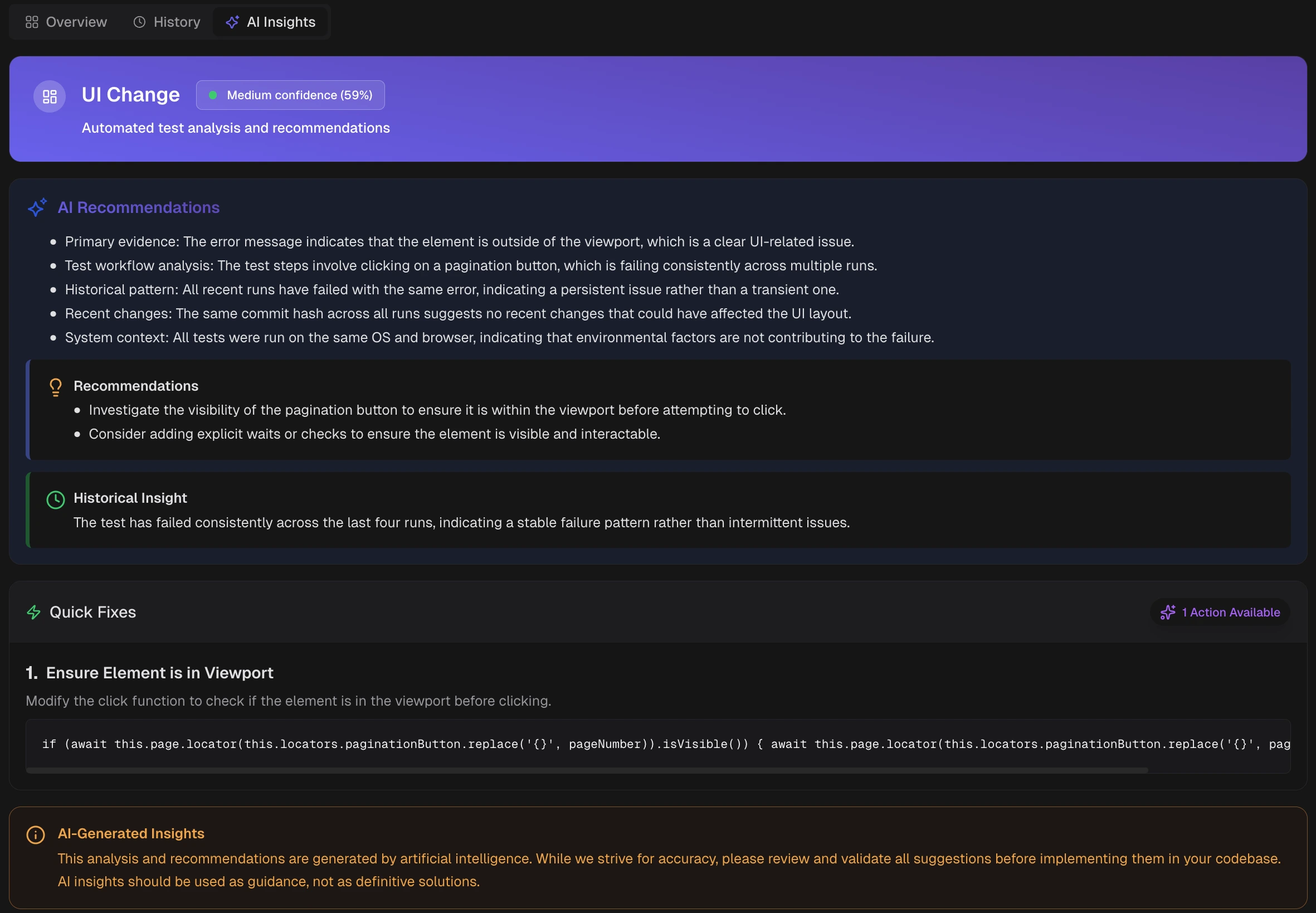

Test Case Analysis

For each failed or flaky test, AI provides a detailed breakdown.

| Section | What it provides |
|---|---|
| Category and Confidence | AI label with confidence score |
| Recommendations | Primary evidence and likely cause |
| Historical Insight | Behavior across recent runs (new or recurring) |
| Quick Fixes | Targeted changes to try first |

AI-generated recommendations are guidance, not definitive solutions. Validate suggestions before implementing them.

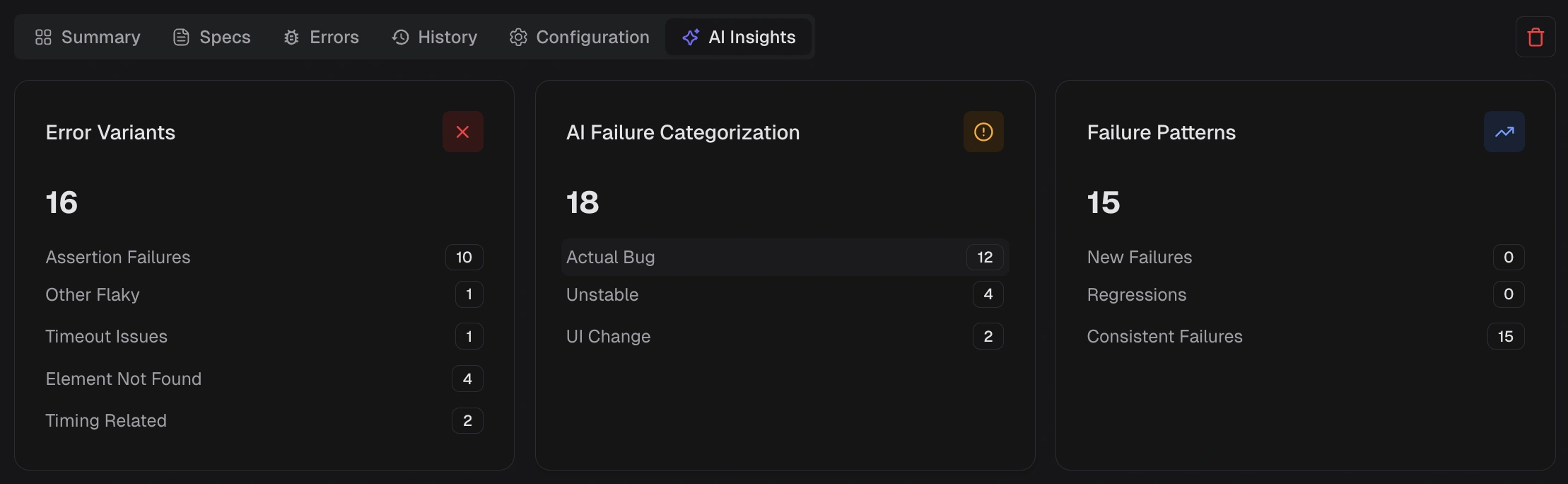

Error Grouping

AI groups similar errors by message text, stack trace patterns, and failure location.

Error types include:

- Assertion Failures

- Timeout Issues

- Element Not Found

- Network Issues

- JavaScript Errors

- Browser Issues

Selecting any KPI tile (variant, category, or pattern) filters the error analysis table to matching tests.

See Error Grouping and Error Analytics for details.
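
To make the idea of grouping by message text and failure location concrete, here is a minimal sketch. It is a simplified, rule-based stand-in and does not represent how TestDino's AI grouping works: the `normalize` and `group_errors` helpers, the tuple input format, and the sample error messages are all hypothetical.

```python
import re
from collections import defaultdict


def normalize(message):
    """Replace volatile details so similar messages compare equal."""
    message = re.sub(r"""(['"]).*?\1""", "<str>", message)  # quoted values
    message = re.sub(r"\d+", "<n>", message)                # numbers
    return message.strip().lower()


def group_errors(errors):
    """Group tests by normalized message and failure location.

    errors: iterable of (test_name, message, top_stack_frame) tuples.
    Returns a dict mapping (normalized_message, frame) to test names.
    """
    groups = defaultdict(list)
    for test_name, message, frame in errors:
        groups[(normalize(message), frame)].append(test_name)
    return dict(groups)


# Illustrative sample data, not real TestDino output.
errors = [
    ("login", "Timeout 30000ms exceeded waiting for '#submit'", "login.spec.ts:14"),
    ("login retry", "Timeout 15000ms exceeded waiting for '#submit-btn'", "login.spec.ts:14"),
    ("cart", "expected 3 to equal 4", "cart.spec.ts:52"),
]

for (message, frame), tests in group_errors(errors).items():
    print(f"{frame}  {message}  -> {tests}")
```

The two timeout errors differ only in their duration and selector, so after normalization they fall into the same group, while the assertion failure stays separate.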

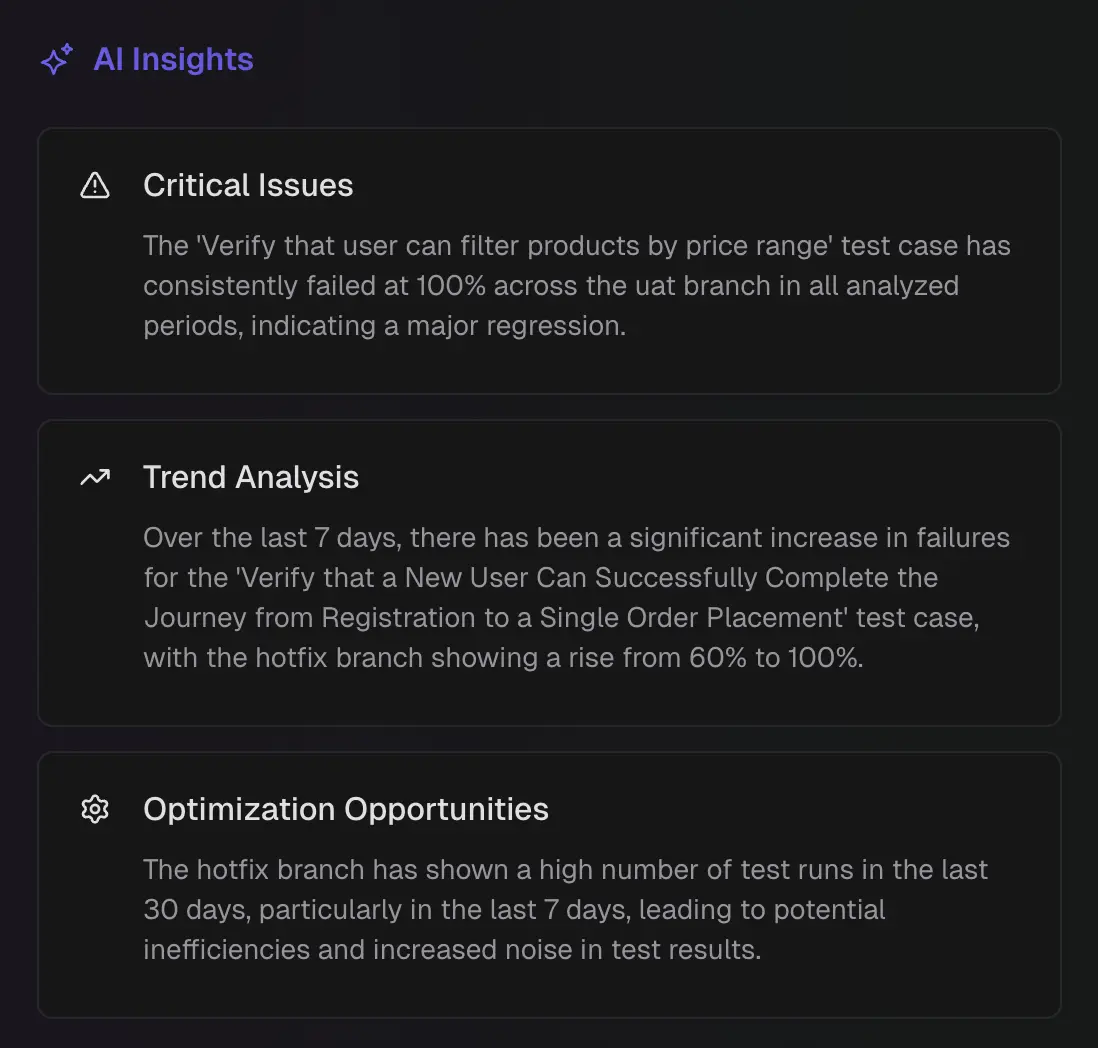

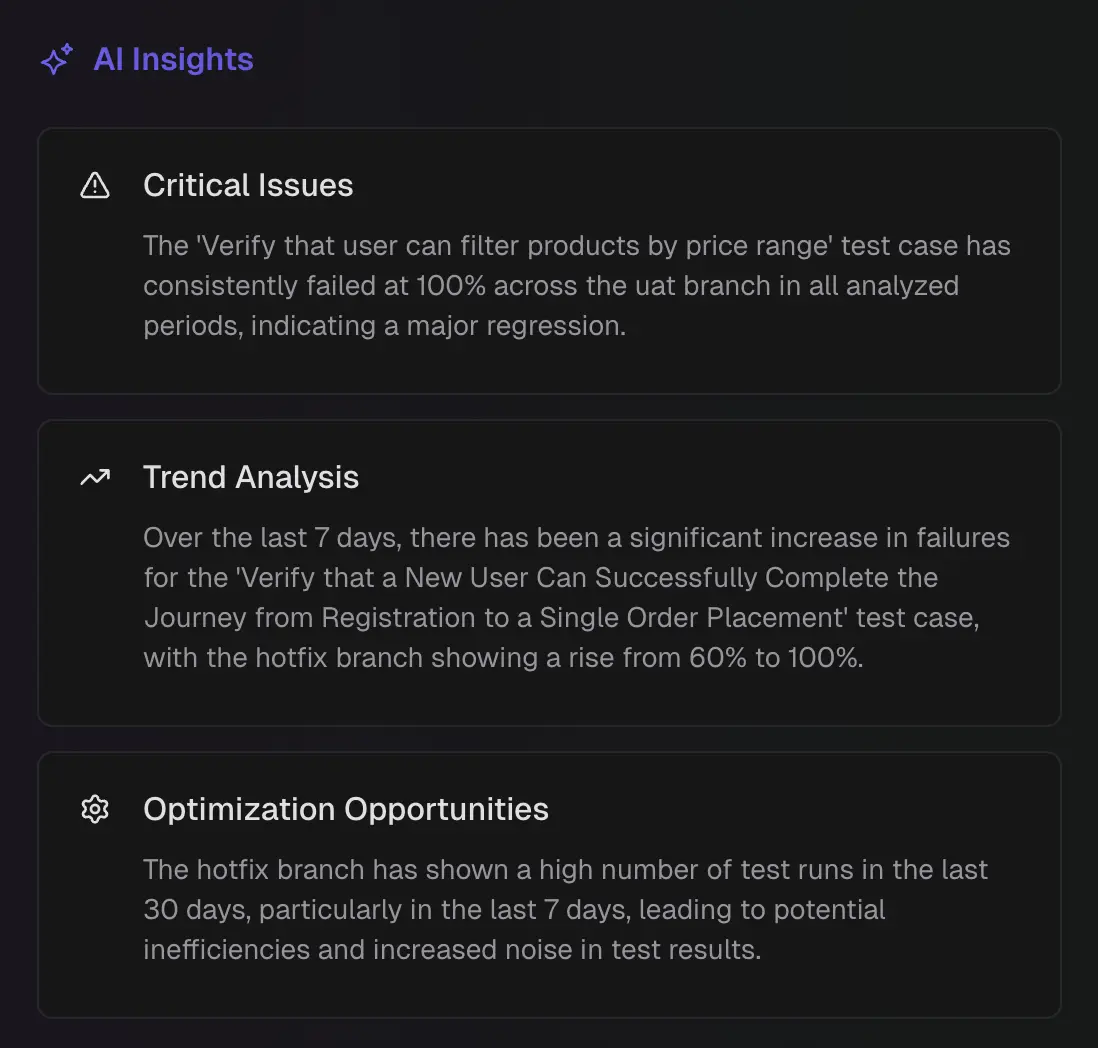

QA Dashboard

The QA Dashboard surfaces AI failure categories at a glance.

Each category shows the total count and top-impacted tests. The dashboard also highlights:

- Critical issues — highest impact failures

- Trend analysis — rising or repeating patterns

- Optimization opportunities — speed and stability improvements

See QA Dashboard for the full view.

MCP Integration

Connect AI assistants (Claude, Cursor) to your TestDino workspace through the MCP server. Assistants query real test data, investigate failures, and suggest fixes without context switching.

Key capabilities:

- Inspect runs by branch, environment, time window, author, or commit

- Fetch full test case debugging context (logs, traces, screenshots, video)

- Run root cause analysis with failure pattern detection

- Get fix recommendations based on historical execution data

See TestDino MCP for setup and configuration.

Explore AI features across test runs, test cases, and the MCP integration.
