Quick Reference

| View | Path | Best for |
|---|---|---|
| QA Dashboard | Dashboard → QA View | Team-wide flaky overview |
| Developer Dashboard | Dashboard → Developer View | Author-specific flaky tests |
| Analytics Summary | Analytics → Summary | Flakiness trends |
| Test Run Summary | Test Runs → Summary | Per-run flaky breakdown |
| Test Case History | Test Case → History | Single test stability |
| Test Explorer | Test Explorer | Flaky rate by file and test case |
| Cross-Environment | Dashboard → QA View | Flaky rates per environment |

How Detection Works

Flaky test detection activates automatically when retries are enabled through the `retries` option in Playwright's `playwright.config.ts`. No additional configuration is required.
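A minimal `playwright.config.ts` that enables retries might look like the following. `defineConfig` and the `retries` option come from `@playwright/test`; the retry counts shown (two on CI, none locally) are only an illustrative choice, not a TestDino requirement.

```typescript
import { defineConfig } from '@playwright/test';

export default defineConfig({
  // Retry failed tests so a fail-then-pass sequence can be observed.
  // Illustrative values: 2 retries on CI, no retries locally.
  retries: process.env.CI ? 2 : 0,
});
```

With `retries: 0` locally, a test that fails once simply fails, so retry-based detection only applies to runs where retries are enabled.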

Within a single run. A test that fails and then passes on retry is flagged as flaky.

Across multiple runs. Tests with inconsistent outcomes on the same code are flagged. TestDino tracks pass/fail patterns and calculates a stability percentage.

Both detection methods indicate that the test result depends on something other than your code.
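The two checks can be sketched as follows, assuming detection works as (1) a test failing and then passing on retry within one run, and (2) the same commit producing both passing and failing results across runs. The `TestRun` shape and both function names are hypothetical and do not reflect TestDino's internal implementation.

```typescript
// Hypothetical data shapes for illustration only; not TestDino's schema.
type Status = 'passed' | 'failed';

interface TestRun {
  commit: string;     // commit SHA the run executed against
  attempts: Status[]; // one entry per attempt: the initial try, then any retries
}

// Within a run: some earlier attempt failed and the final attempt passed.
function isFlakyWithinRun(run: TestRun): boolean {
  const n = run.attempts.length;
  if (n < 2) return false; // no retry happened, so no fail-then-pass sequence
  const earlierFailed = run.attempts.slice(0, n - 1).some(s => s === 'failed');
  return earlierFailed && run.attempts[n - 1] === 'passed';
}

// Across runs: the same commit produced both passing and failing final results.
function isFlakyAcrossRuns(runs: TestRun[]): boolean {
  const finalsByCommit = new Map<string, Set<Status>>();
  for (const run of runs) {
    if (run.attempts.length === 0) continue; // no result recorded for this run
    const final = run.attempts[run.attempts.length - 1];
    const finals = finalsByCommit.get(run.commit) ?? new Set<Status>();
    finals.add(final);
    finalsByCommit.set(run.commit, finals);
  }
  // More than one distinct final status for a commit means the code alone
  // did not determine the outcome.
  return Array.from(finalsByCommit.values()).some(finals => finals.size > 1);
}
```

Grouping by commit is what separates flakiness from a regression: a test that passes on one commit and fails on the next may simply have been broken by the change, so those results are never compared.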

Flaky Test Categories

TestDino classifies flaky tests by root cause:

| Category | Description |
|---|---|
| Timing Related | Race conditions, order dependencies, and insufficient waits |
| Environment Dependent | Fails only in specific environments or runners |
| Network Dependent | Intermittent API or service failures |
| Assertion Intermittent | Non-deterministic data causes occasional mismatches |
| Other | Unstable for reasons outside the above |

Common causes

- Fixed waits instead of waiting for the page to be ready

- Missing `await` causes steps to run out of order

- Weak selectors that match more than one element

- Tests share data and affect each other

- Parallel runs collide on the same user or record

- Slow or unstable network or third-party APIs

- CI setup differs from local environment
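The missing-`await` cause can be reproduced without a browser. In this sketch, `step` is a hypothetical stand-in for an async page action such as filling a form; it is not a Playwright API. Leaving out `await` lets the next line run before the earlier step finishes, which is the same ordering bug that makes a test pass or fail depending on timing.

```typescript
// Hypothetical stand-in for an async page action (e.g. filling a form).
// It records its name in `log` once its simulated work finishes.
function step(log: string[], name: string, ms: number): Promise<void> {
  return new Promise<void>(resolve =>
    setTimeout(() => {
      log.push(name);
      resolve();
    }, ms),
  );
}

// Bug: the promise from step() is not awaited, so the next line runs immediately.
async function withoutAwait(): Promise<string[]> {
  const log: string[] = [];
  step(log, 'fill form', 20); // missing await
  log.push('click submit');   // runs before the form is filled
  // Wait long enough for the stray step to finish so the wrong order is visible.
  await new Promise<void>(resolve => setTimeout(resolve, 50));
  return log;
}

// Fix: await each step so they complete in order.
async function withAwait(): Promise<string[]> {
  const log: string[] = [];
  await step(log, 'fill form', 20);
  log.push('click submit');
  return log;
}
```

In TypeScript test suites, the typescript-eslint rule `@typescript-eslint/no-floating-promises` reports un-awaited promises like the one in `withoutAwait` before they reach CI.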

Where to Find Flaky Tests

QA Dashboard

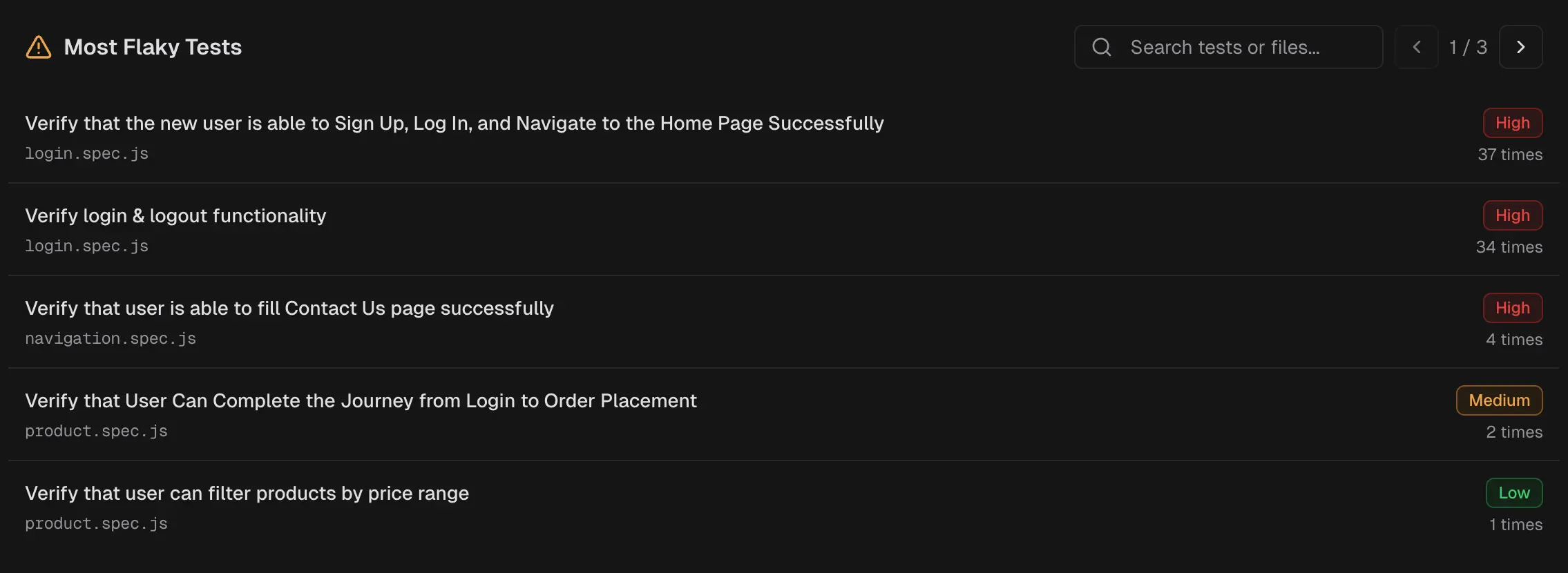

Open Dashboard → QA View. The Most Flaky Tests panel lists tests with the highest flaky rates in the selected period and environment. Each entry shows the test name, spec file, flaky rate percentage, and a link to the latest run. Click any test to open its most recent execution.

Developer Dashboard

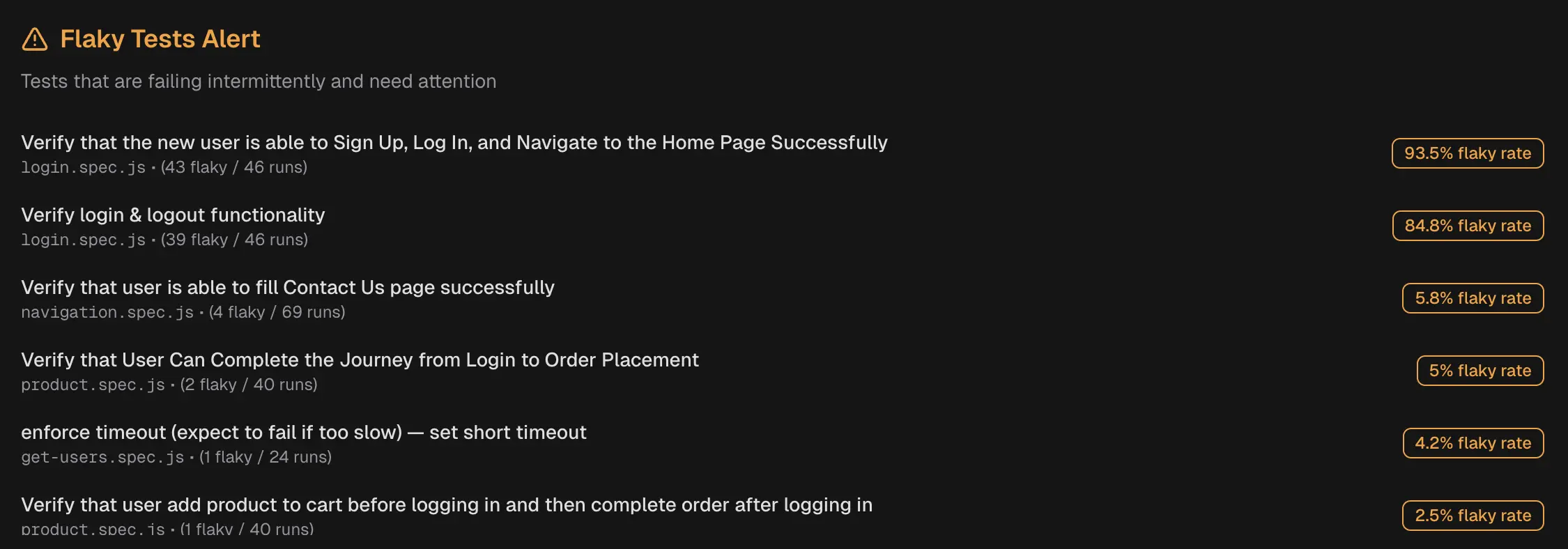

Open Dashboard → Developer View. The Flaky Tests Alert panel shows flaky tests filtered by author.

Analytics Summary

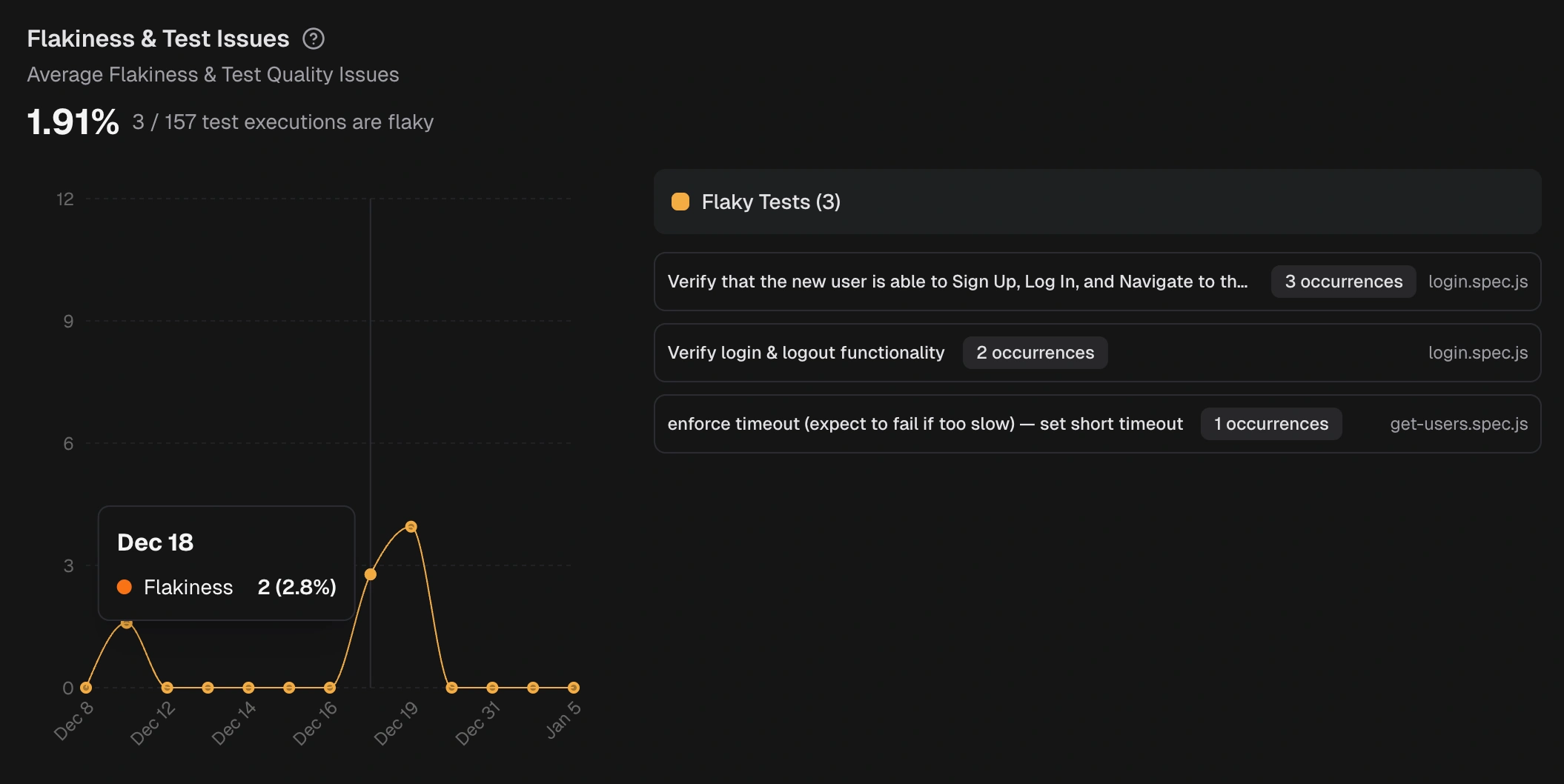

Open Analytics → Summary. The Flakiness & Test Issues chart shows the flaky rate trend over time and a list of flaky tests with spec file and execution date. A rising trend indicates increasing instability in your test suite.

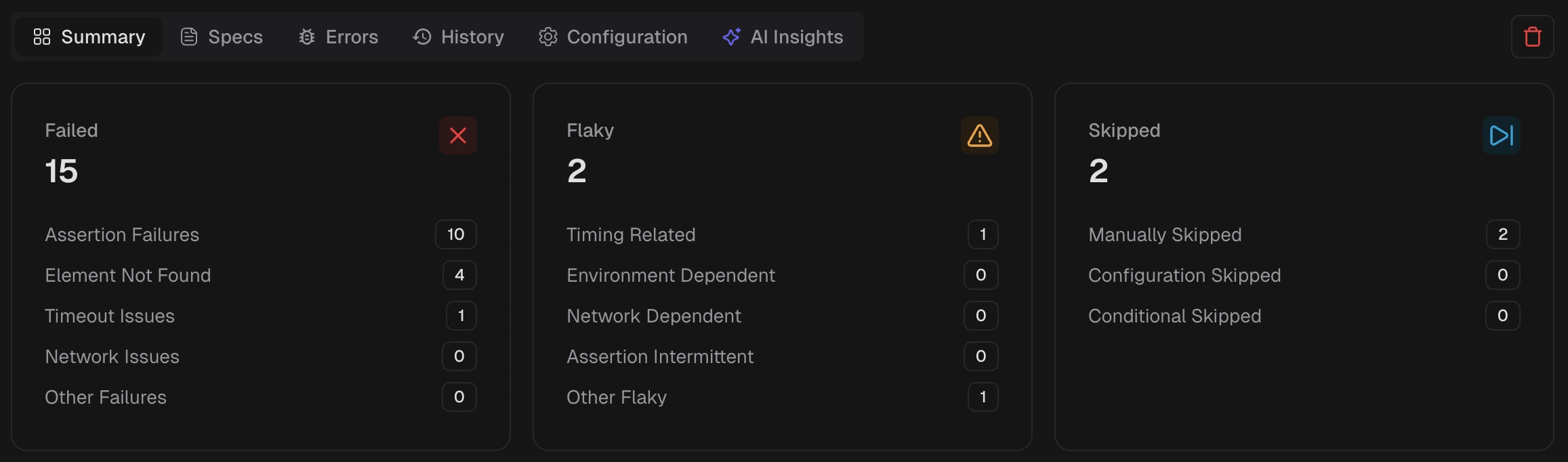

Test Run Summary

Open any test run. The Summary tab shows flaky test counts grouped by category: Timing Related, Environment Dependent, Network Dependent, Assertion Intermittent, and Other Flaky. Click a category to filter the detailed analysis table.


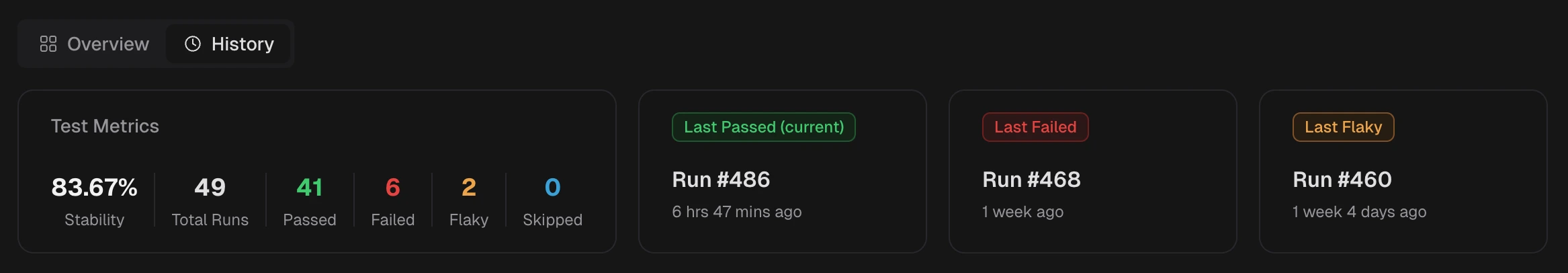

Test Case History

Open a specific test case and go to the History tab. The stability percentage shows how often the test passes:

Stability = (Passed Runs / Total Runs) × 100

A test with 100% stability has never failed or been flaky. Any value below 100% indicates inconsistent behavior. The Last Flaky tile links to the most recent run where the test was marked flaky.
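The stability formula can be expressed as a small function. This is a sketch under one stated assumption: a run marked flaky does not count as passed, which matches the statement that only a test that has never failed or been flaky reaches 100%. The `RunOutcome` type and `stability` function are hypothetical names.

```typescript
// Hypothetical outcome of one run of a single test case; not TestDino's schema.
type RunOutcome = 'passed' | 'failed' | 'flaky';

// Stability = (Passed Runs / Total Runs) x 100.
// Assumption: flaky runs are not counted as passed, so both failed and
// flaky runs pull stability below 100%.
function stability(outcomes: RunOutcome[]): number | null {
  if (outcomes.length === 0) return null; // no history yet
  const passed = outcomes.filter(o => o === 'passed').length;
  return (passed * 100) / outcomes.length;
}
```

For example, three passed runs and one flaky run give 3 / 4 × 100 = 75%.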

Test Explorer

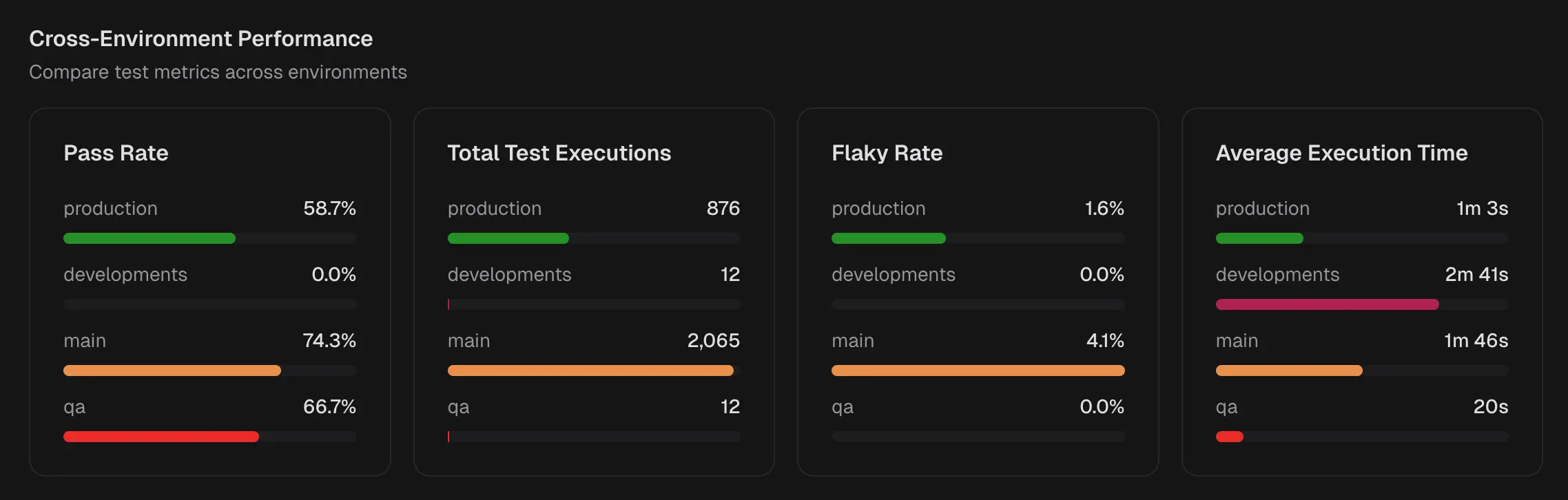

Open Test Explorer from the sidebar. The Flaky Rate column shows the percentage of executions with flaky results for each spec file or test case. Sort by flaky rate to find the most unstable specs. Expand a spec row to see flaky rates for individual test cases, or switch to Flat view to compare across files.

Cross-Environment Comparison

Open Dashboard → QA View → Cross-Environment Performance. The Flaky Rate row shows flaky percentages per environment.

A high flaky rate in one environment but not others suggests an environment-dependent issue, such as resource constraints or services that are intermittently unavailable.
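Both the Test Explorer Flaky Rate column and the per-environment Flaky Rate row are percentages of executions with flaky results, grouped by a different key. A minimal sketch of that calculation, assuming each execution carries a spec file, an environment, and a flaky flag, follows. The `Execution` fields and the `flakyRateBy` helper are hypothetical, not TestDino's data model.

```typescript
// Hypothetical execution record; field names are illustrative only.
interface Execution {
  specFile: string;
  testCase: string;
  environment: string;
  flaky: boolean;
}

// Flaky rate = flaky executions / total executions x 100 for each group,
// sorted from most to least flaky (like sorting the Flaky Rate column).
function flakyRateBy(
  executions: Execution[],
  key: (e: Execution) => string,
): Array<{ group: string; flakyRate: number }> {
  const counts = new Map<string, { flaky: number; total: number }>();
  for (const e of executions) {
    const k = key(e);
    const c = counts.get(k) ?? { flaky: 0, total: 0 };
    c.total += 1;
    if (e.flaky) c.flaky += 1;
    counts.set(k, c);
  }
  return Array.from(counts, ([group, c]) => ({ group, flakyRate: (c.flaky * 100) / c.total }))
    .sort((a, b) => b.flakyRate - a.flakyRate);
}
```

Passing `e => e.specFile` gives a per-file view, while `e => e.environment` gives a per-environment view. Because the key is a parameter, one function covers both comparisons.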

CI Check Behavior

GitHub CI Checks handle flaky tests in two modes:

| Mode | Behavior | Use case |
|---|---|---|
| Strict | Flaky tests count as failures | Production branches where stability matters |
| Neutral | Flaky tests excluded from pass rate | Development branches to reduce noise |
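The effect of the two modes on a run's pass rate can be sketched as follows. This is an interpretation, not TestDino's documented formula: it assumes that Strict keeps flaky tests in the total while not counting them as passed, and that Neutral drops flaky tests from both the passed count and the total. `RunCounts`, `CheckMode`, and `passRate` are hypothetical names.

```typescript
// Hypothetical per-run test counts; not TestDino's API.
interface RunCounts {
  passed: number;
  failed: number;
  flaky: number;
}

type CheckMode = 'strict' | 'neutral';

// Strict: flaky tests count as failures (in the total, not in the passed count).
// Neutral (assumption): flaky tests are removed from both the passed count and the total.
function passRate(counts: RunCounts, mode: CheckMode): number | null {
  const total =
    mode === 'strict'
      ? counts.passed + counts.failed + counts.flaky
      : counts.passed + counts.failed;
  if (total === 0) return null; // nothing left to count
  return (counts.passed * 100) / total;
}
```

With 90 passed, 0 failed, and 10 flaky tests, this sketch reports 90% in Strict mode and 100% in Neutral mode, which illustrates why Neutral reduces noise on development branches.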

Export Flaky Test Data

Use the TestDino MCP server to query flaky tests programmatically.

See TestDino MCP for more details.