KPI Tiles

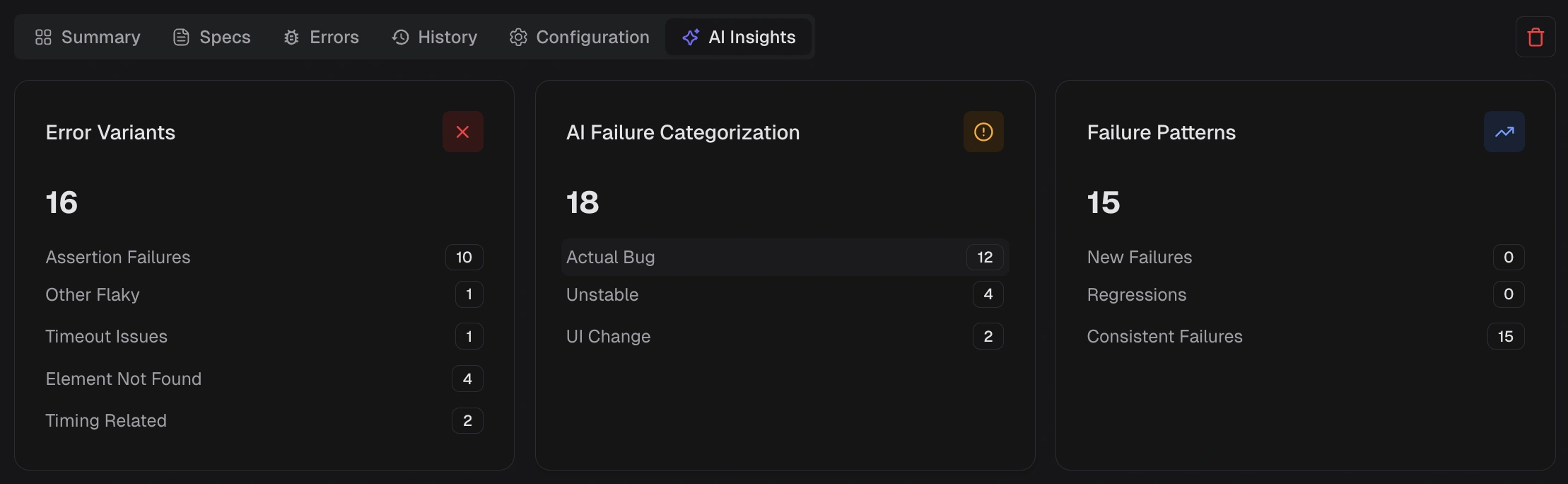

Error Variants

Shows distinct error signatures and how many tests match each variant, for example, timeout, element not found, network error, or timing-related. Use this to identify the most common failure shape in the run.

AI Failure Categorization

Shows how failures are classified into:

- Actual bug

- UI change

- Unstable

- Miscellaneous

Failure Patterns

Highlights how failing tests behave across recent executions:

- New Failures: Tests that started failing within the selected window.

- Regressions: Tests that passed recently but now fail again.

- Consistent Failures: Tests failing across most or all recent runs.
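As a rough illustration of how these three patterns differ, the sketch below classifies a single test from its pass/fail history in the selected window. It is not the product's actual logic. The function name, the 0.8 threshold for "most or all", and the reading of a regression as "failed earlier, then passed, now fails again" are all assumptions made for this example.

```python
from typing import List, Optional


def classify_failure_pattern(
    history: List[bool],
    consistent_threshold: float = 0.8,  # assumed cutoff for "most or all" runs
) -> Optional[str]:
    """Classify one test from its results, oldest to newest (True = passed).

    Hypothetical helper: returns None when the latest run passed or
    when there is no history to inspect.
    """
    if not history or history[-1]:
        return None

    fail_ratio = history.count(False) / len(history)
    if fail_ratio >= consistent_threshold or True not in history:
        return "Consistent Failure"

    # Position of the most recent passing run in the window.
    last_pass = len(history) - 1 - history[::-1].index(True)

    # Assumed distinction: a failure before the last pass means the test
    # broke, recovered, and is failing again (a regression); otherwise
    # the test has only just started failing (a new failure).
    if False in history[:last_pass]:
        return "Regression"
    return "New Failure"
```

For example, under these assumptions a history of pass, pass, pass, fail counts as a new failure, while fail, pass, pass, fail counts as a regression.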

Error Analysis

This table lists failing tests with:

- Test case

- Failure category

- Error text

- Error variant

- Duration
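
If the rows of this table are exported for offline analysis, the per-variant counts shown in the Error Variants tile can be approximated by grouping the rows on the error variant column. The sketch below assumes each row is a dictionary; the field names and the sample rows are made up for illustration and do not reflect a documented export format.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Made-up sample rows; the keys mirror the table columns but are hypothetical.
rows: List[Dict[str, object]] = [
    {"test_case": "login_test", "failure_category": "Unstable",
     "error_text": "Timed out after 30s", "error_variant": "timeout",
     "duration_s": 30.2},
    {"test_case": "checkout_test", "failure_category": "UI change",
     "error_text": "No element #pay-button", "error_variant": "element not found",
     "duration_s": 4.1},
    {"test_case": "search_test", "failure_category": "Unstable",
     "error_text": "Timed out after 30s", "error_variant": "timeout",
     "duration_s": 30.0},
]


def count_error_variants(rows: List[Dict[str, object]]) -> List[Tuple[str, int]]:
    """Return (variant, number of failing tests) pairs, most common first."""
    counts = Counter(str(row["error_variant"]) for row in rows)
    return counts.most_common()


variant_counts = count_error_variants(rows)
```

The first entry of `variant_counts` is the most common failure shape among the exported rows.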